When I first started thinking about authentication and authorization in a local-first context I found it super weird and confusing, like I’d stepped through the looking-glass. I found myself wrestling with big philosophical questions like “where does authority ultimately come from?” and “what does it mean to know someone?”.

I’m going to walk you through my journey, from that initial disorientation to what I believe are solid, principled approaches, in the course of developing the @localfirst/auth library.

Why this is weird and confusing

It might not be obvious why this is disorienting, so I’ll explain.

Without a server, it’s not clear where authority or identity come from.

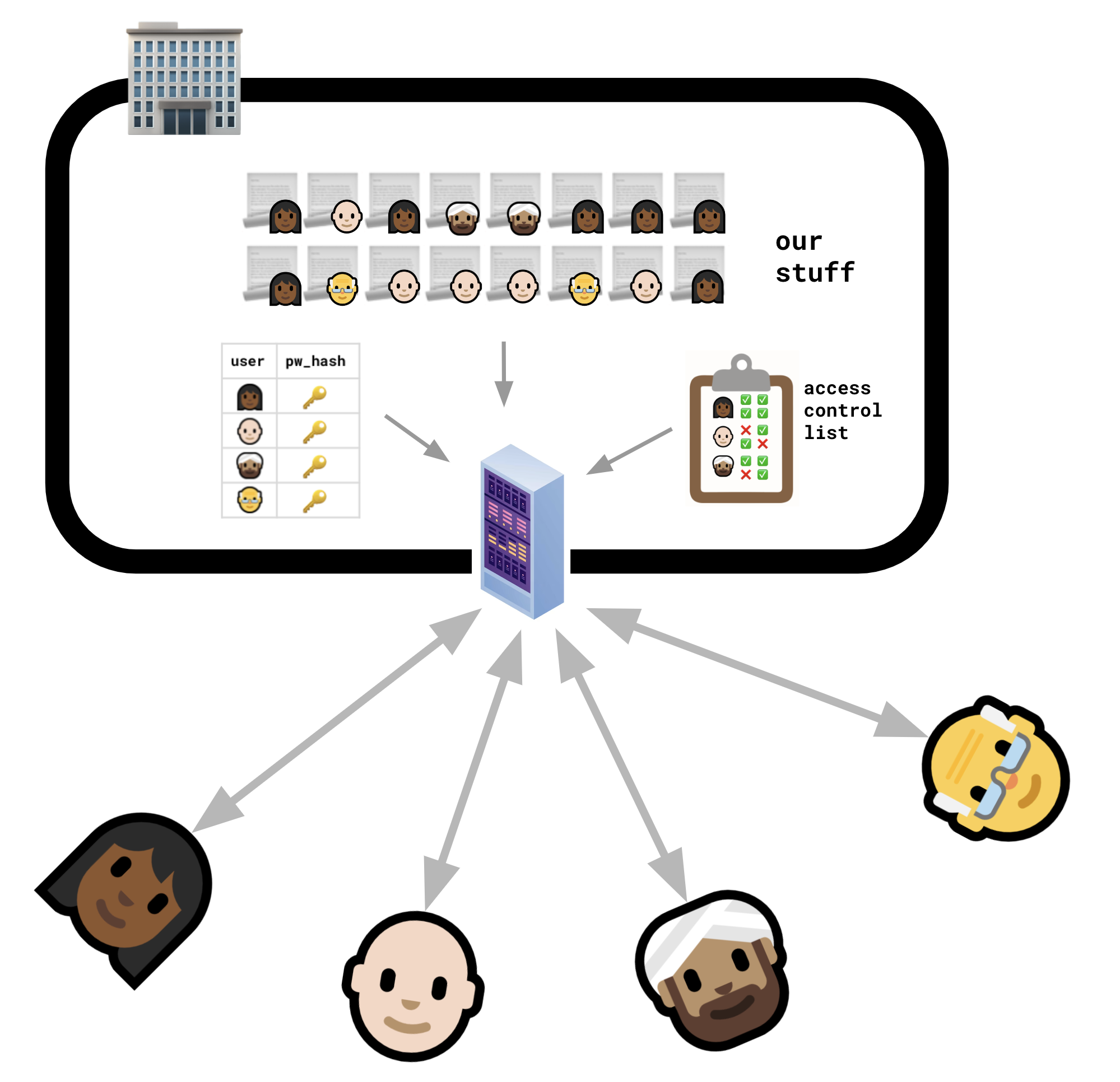

Traditional authentication and authorization ultimately “root out” in a physical piece of hardware, owned and controlled by a company that you’ve decided to trust. The hardware is a server that has your username and password and rules about what you’re allowed to do. It might be controlled by your employer, or a SaaS provider, or a big tech company.

The server is like a guard at the castle gates. If the server doesn’t recognize you, it doesn’t let you in. If the server doesn’t think you should be allowed to have something, it doesn’t give it to you.

Of course, the whole point of local-first software is that we don’t love that arrangement.

But when you take away that central server, you’re losing the thing that all our notions of security are anchored to.

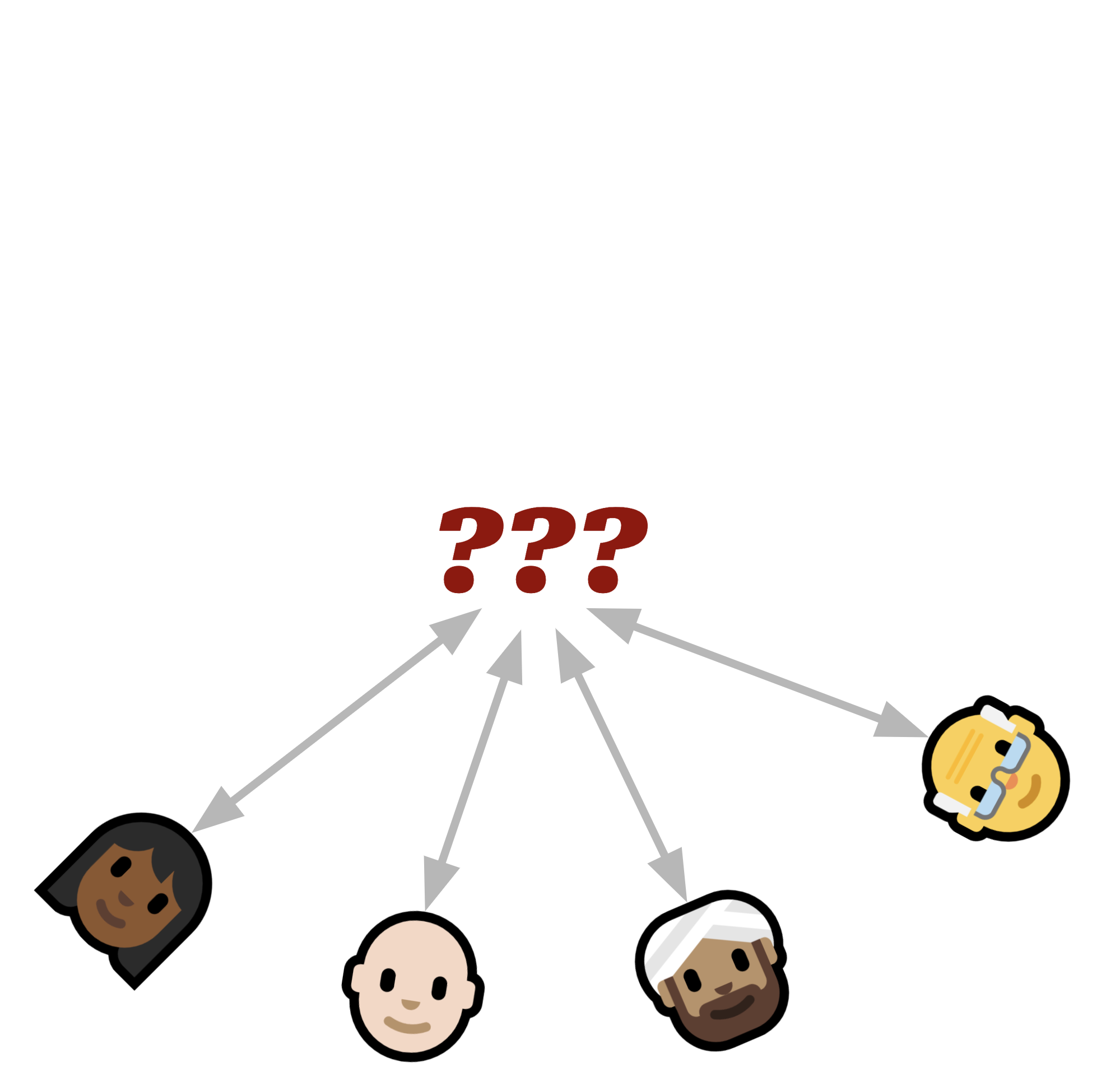

If all you have is clients, who are peers — which means they relate to each other as equals — then how do we know who is who? How do we know who to trust? What do permissions even mean — who exactly is “giving permission”?

“Bootstrapping” has become a word that we associate with something as easy and routine as turning your computer on. But the whole point of bootstrapping as a metaphor is that it’s supposed to be impossible: You can’t lift yourself up by pulling on your bootstraps.

It’s like living in a monarchy and realizing that the king is just another person, and not someone appointed by the gods. Just like countries making that transition from monarchy to democracy, we’re suddenly adrift, and have to come up with completely new arrangements to solve problems that were already solved — even if in a suboptimal way.

Without a server, it feels like we’re having to pull ourselves up by our bootstraps on some fundamental questions:

- What anchors identity? How do we authenticate with a peer device?

- What anchors authority? How do permissions work? Who has the authority to give anyone permission anyway?

The road to localfirst/auth

In 2020, a couple of weeks into the pandemic, I started work on a library that became localfirst/auth.

I had to learn about modern cryptography starting from zero.

I was immediately fascinated by how empowering public-key cryptography is, in the sense that it can put me on an equal footing with even very powerful adversaries.

The movies would lead you to believe that defeating encryption is something a dude in a hoodie can do just by typing really hard.

But in the real world strong cryptography can’t be defeated.* It just can’t.**

Here is legendary cryptologist and mathematician Daniel J. Bernstein writing over a decade ago:

For most cryptographic operations, there exist widely accepted primitives that have been extensively studied, and breaking them is considered computationally infeasible on any existing computer cluster.

The point is you don’t need a lot of money, or computing power, or specialized equipment. Strong encryption doesn’t cost any more than weak encryption. It doesn’t cost any more to use a long encryption key than a short one. It’s just math. It’s just code.

With no servers, what anchors identity?

So anyway I’m sitting down to work on this, and the first thing that makes my head spin is, how do you know who you’re talking to?

When we collaborate or communicate online using a traditional cloud-based platform, we can think of the service provider as vouching for us — introducing us to other people and confirming our identity.

If I’m using Slack to talk to you, you know it’s me because Slack says it’s me, and you trust Slack. And maybe Slack knows it’s me because I logged in with Google: Google vouched for me, and Slack trusts Google.

If we don’t want to put our trust in a centralized third party, then who will vouch for us?

In a system where everyone’s a peer, maybe peers could vouch for peers, in some kind of chain of trust.

But that chain has to be anchored somewhere: Maybe Charlie vouches for Bob to Alice, but who vouches for Charlie?

Managing public keys

Ignoring the infinite regress problem for the moment, it seems clear that the solution will be something something public-key cryptography. But to do public-key cryptography, you need to know people’s public keys.

“Crypto is a tool for turning a whole swathe of problems into key management problems. Key management problems are way harder than virtually all cryptographers think.”

— Dr. Lea Kissner

Dr. Kissner was the head of information security at Twitter and I don’t think they meant the above tweet to be encouraging.

But I do find it encouraging. Even granting that key management is a hard problem, the idea that you can collapse lots of different problems into this one problem is exciting, because you just have to focus on solving that thing. If you can succeed at the key management thing (and it’s a big if), then you can solve this “whole swathe of problems.”

So how do we learn people’s public keys?

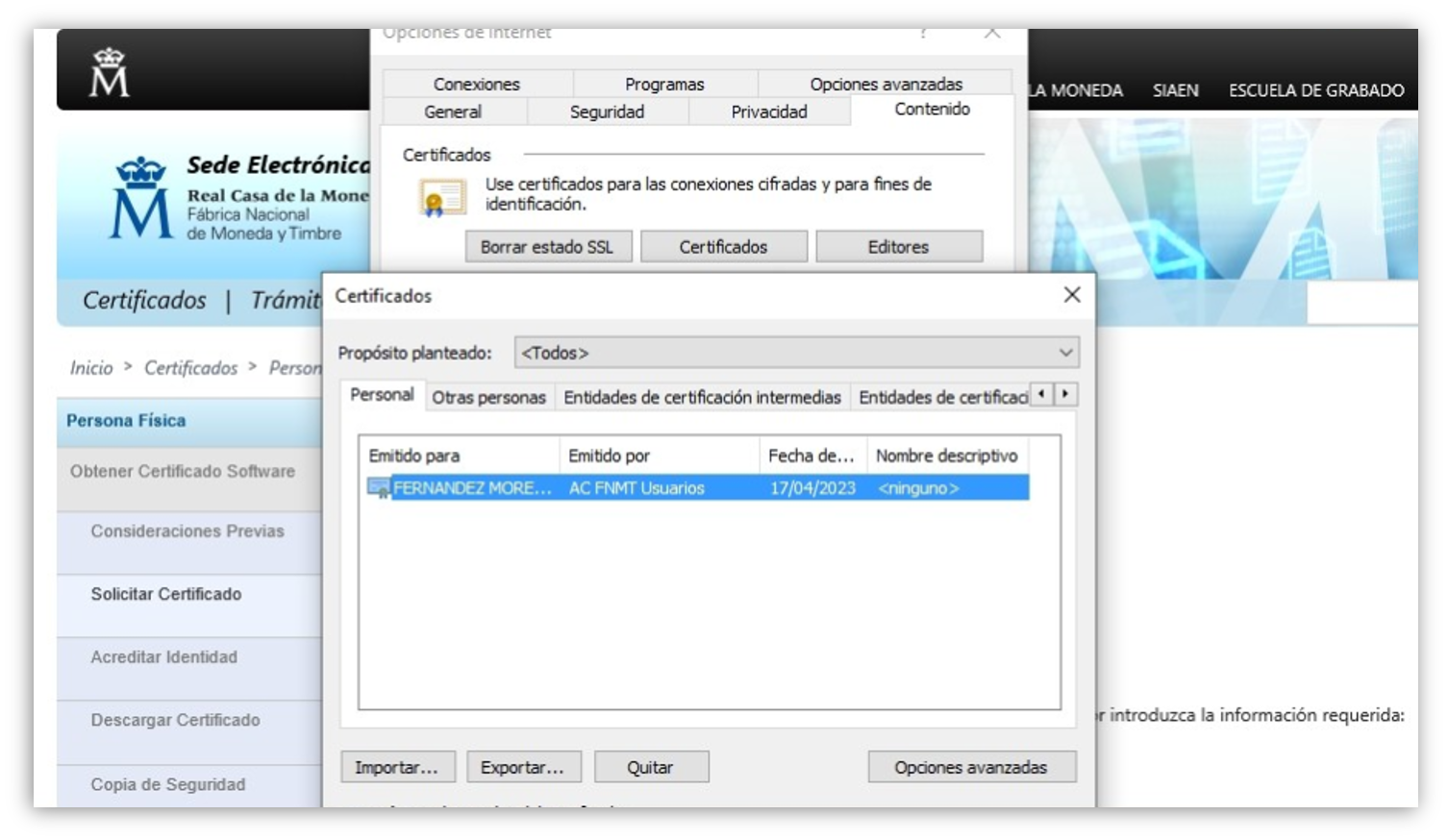

Here in Spain, I can obtain a certificate from the treasury department to install in my browser, which allows me to transparently authenticate to government websites and sign official documents digitally.

The most obvious solution is to have some trusted party keep a directory of people and their public keys, and of course that’s totally a thing that exists.

The certificate authorities (CAs) that make secure https connections possible are an example. Some big companies have their own certificate authorities to anchor their employees’ digital identities. And countries can do the same for residents.

This is what my public PGP key looks like.

Bringing encryption to the nerd community

But we’re trying to do this without depending on centralized services.

If you want a decentralized solution, the alternative seems to be for people to actively share their own keys directly with each other.

This was the approach taken by PGP (“Pretty Good Privacy”) in the 1990s.

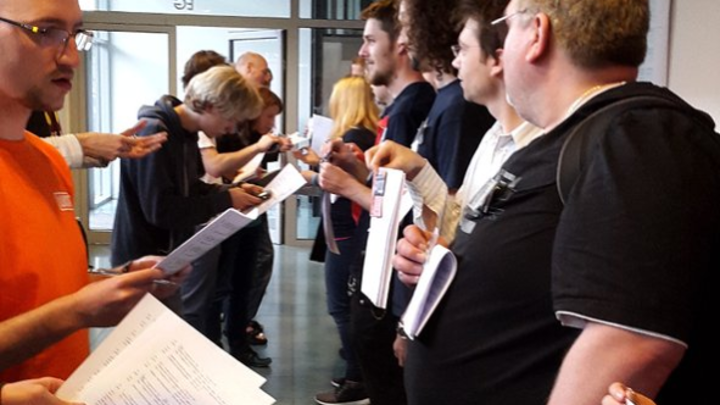

PGP was the first encryption toolset intended for ordinary users. The idea was that people would put their public keys on their websites and in their email signatures. Apparently there were key signing parties where people exchanged and signed each other’s keys.

From the looks of it these parties were lit.🥳

In 2014, Keybase had a better idea: People can just post their public keys in machine-readable form on public sites where they have accounts, like Twitter or GitHub. They called these “social proofs”. Then anyone can look up a person’s public keys by username, and use them to verify their identity.

I’ve used Keybase to “verify myself” by posting machine-readable statements on Twitter, GitHub, and Reddit

Bringing encryption to the masses

The first applications to truly bring end-to-end encryption to the masses were messaging apps. The Signal protocol came out in 2013, and two years later WhatsApp brought it to their entire customer base, and overnight about a billion people were using encrypted messaging. Since then WhatsApp’s user base has grown to two billion and then to three billion, making it one of the most widely used communication tools in all of human history.

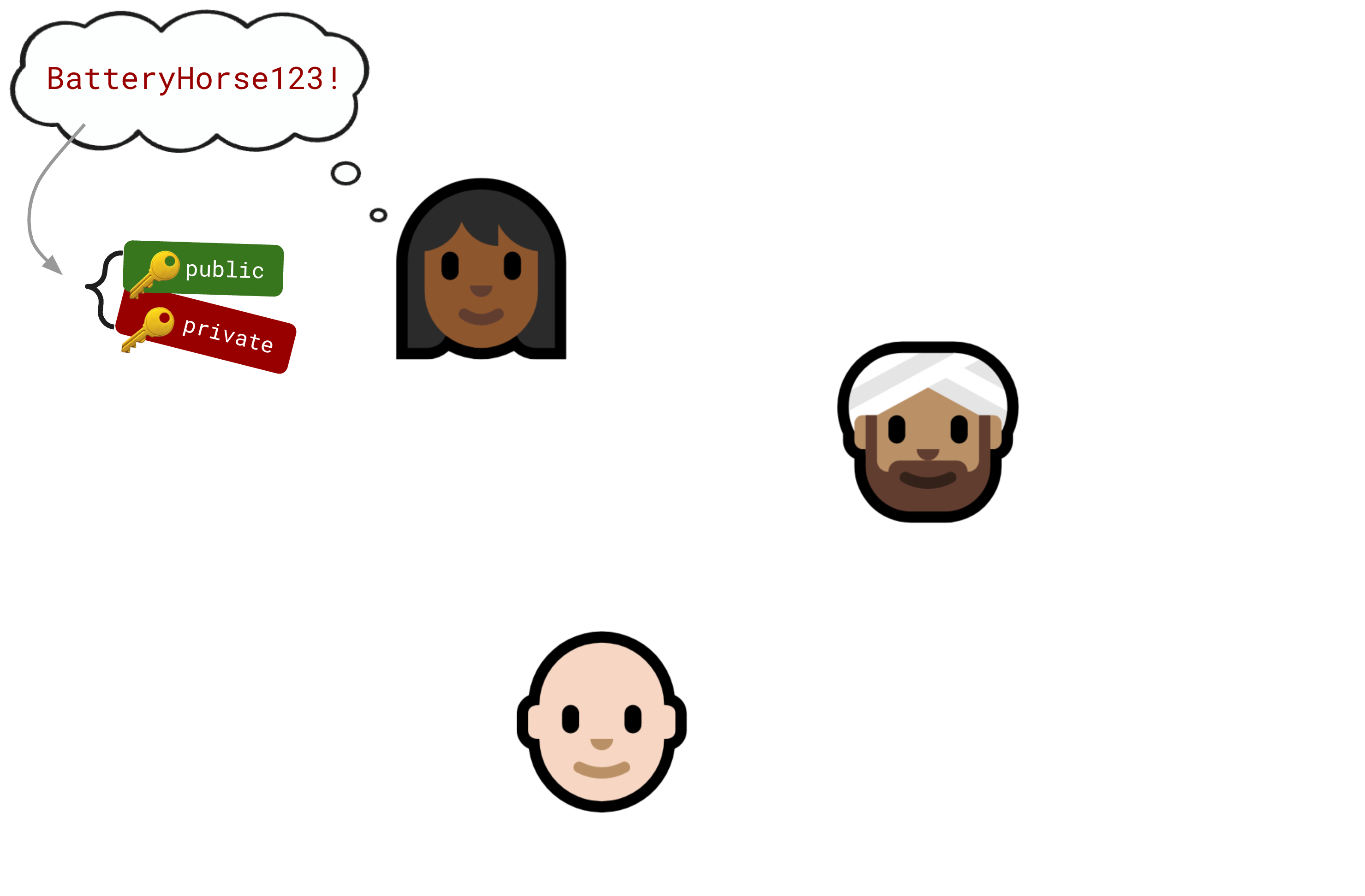

This succeeded because they made encryption transparent to the user. The key insight is that a public key will never be like an email or a telephone number. It’s not a stable component of our digital identity. Wrangling encryption keys is a job for computers, not for people. Devices should just generate keys as needed and we should never see them or have to think about them. Private keys should never leave the device, and public keys should be shared automatically with other devices.

| | for the nerd community | for the masses |
|---|---|---|
| what is the scope of a key? | one human identity | one app on one device |
| who manages keys? | humans | computers |
| when do we see our keys? | often | never |
| how are keys shared? | manually, human to human | automatically, device to device |

We’re agreed, then, that device-to-device sharing of keys is better than key signing parties. But Alice’s device still needs to know that Bob’s device is really Bob’s device, and not Eve’s device pretending to be Bob’s device.

TOFU and other meat substitutes

TOFU considered squishy. Image: Yoav Aziz

WhatsApp and Signal take an approach called “Trust On First Use”, or TOFU.

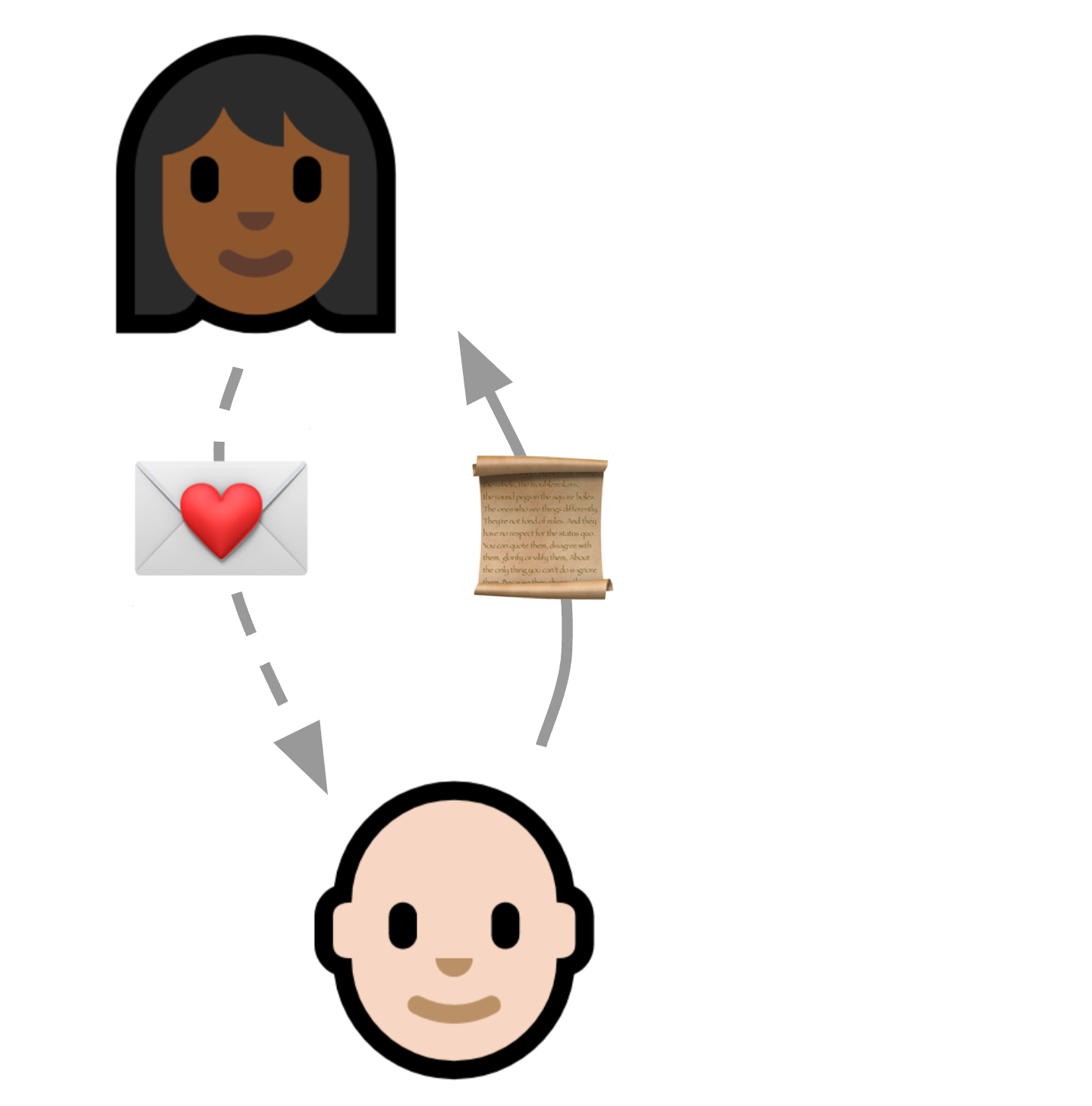

Here’s the idea behind TOFU:

- When Alice invites Bob to communicate with her, she just trusts that the first person to show up saying they’re Bob is, in fact, Bob.

- Bob and Alice exchange their public keys on that initial connection.

- From that point on, and on subsequent connections, they can use those keys to authenticate and encrypt messages to each other.

There’s a little risk there, they’re both taking a little leap of faith, but it’s a one-time thing, it’s probably fine.

That seems reasonable. The problem, though, is that the “first use” bit is kind of misleading, because in practice TOFU doesn’t happen just once.

Remember, the keys we care about are device-level keys, not user-level keys. So what happens when Bob gets a new phone, or Alice reinstalls the software on her laptop?

What happens is an “account reset”, and at that point we’re back to TOFU — except that now, the attack scenarios are a bit more plausible: an impostor might have engineered the reset, and it would be more feasible to exploit it.
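To make the mechanics concrete, here is a minimal sketch of key pinning under TOFU. It is an illustration only: `TofuStore`, its methods, and the string "keys" are hypothetical names invented for this example, not how WhatsApp or Signal actually store keys.

```typescript
import { createHash } from "node:crypto";

type PinResult = "first-use" | "match" | "key-changed";

// Hypothetical in-memory pin store: peer name -> fingerprint of the first key we saw.
class TofuStore {
  private pins = new Map<string, string>();

  private fingerprint(publicKey: string): string {
    return createHash("sha256").update(publicKey).digest("hex");
  }

  // Compare a presented key with the pinned one; pin it if we have none yet.
  check(peer: string, publicKey: string): PinResult {
    const seen = this.fingerprint(publicKey);
    const pinned = this.pins.get(peer);
    if (pinned === undefined) {
      this.pins.set(peer, seen); // the leap of faith
      return "first-use";
    }
    return pinned === seen ? "match" : "key-changed";
  }

  // An "account reset" amounts to forgetting the pin and trusting again.
  reset(peer: string): void {
    this.pins.delete(peer);
  }
}

const store = new TofuStore();
const results = [
  store.check("bob", "bob-phone-key"), // first contact: trusted blindly
  store.check("bob", "bob-phone-key"), // same key later: fine
  store.check("bob", "some-other-key"), // new phone, or an impostor?
];
store.reset("bob");
const afterReset = store.check("bob", "some-other-key");
```

Note that after the reset, the store accepts whatever key shows up next, which is exactly the weakness described above.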

When a user has an account reset, the messaging apps suggest to everyone they communicate with that they compare “security codes” or “safety numbers”. Most users don’t do this — I never have, I can’t imagine that people less technical than me do this.

And anyway this is bad enough for a single pairwise connection; where this all really falls apart is in a large group, where the number of pairwise connections grows quadratically with the size of the group: n members means n(n − 1)/2 pairs, so a group of 50 has 1,225 connections to verify.

PAKEs

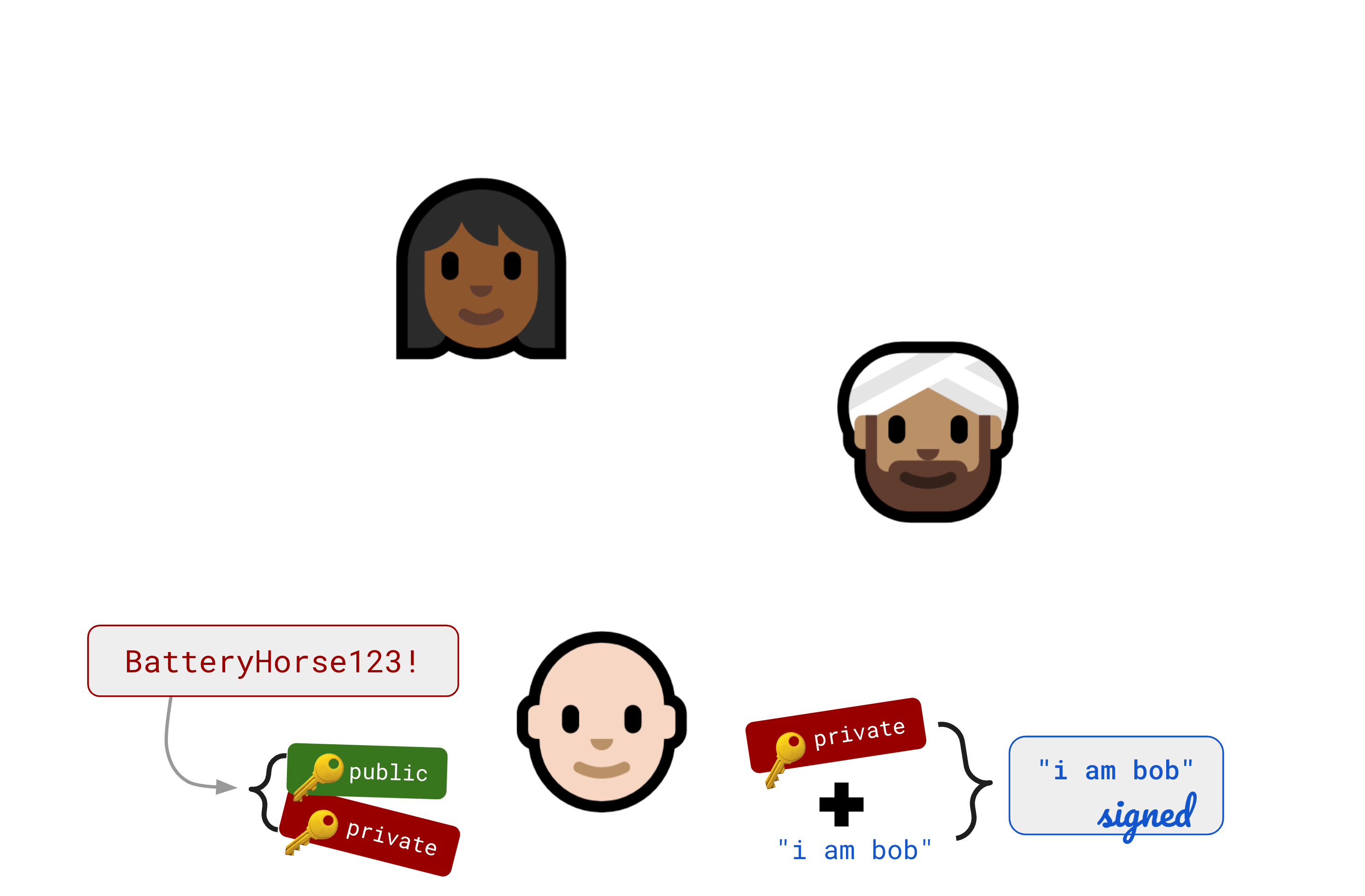

With a balanced PAKE, both parties need to know the password.

So when you want to connect with someone and don’t know their public key, is there an alternative to TOFU for securing that initial connection?

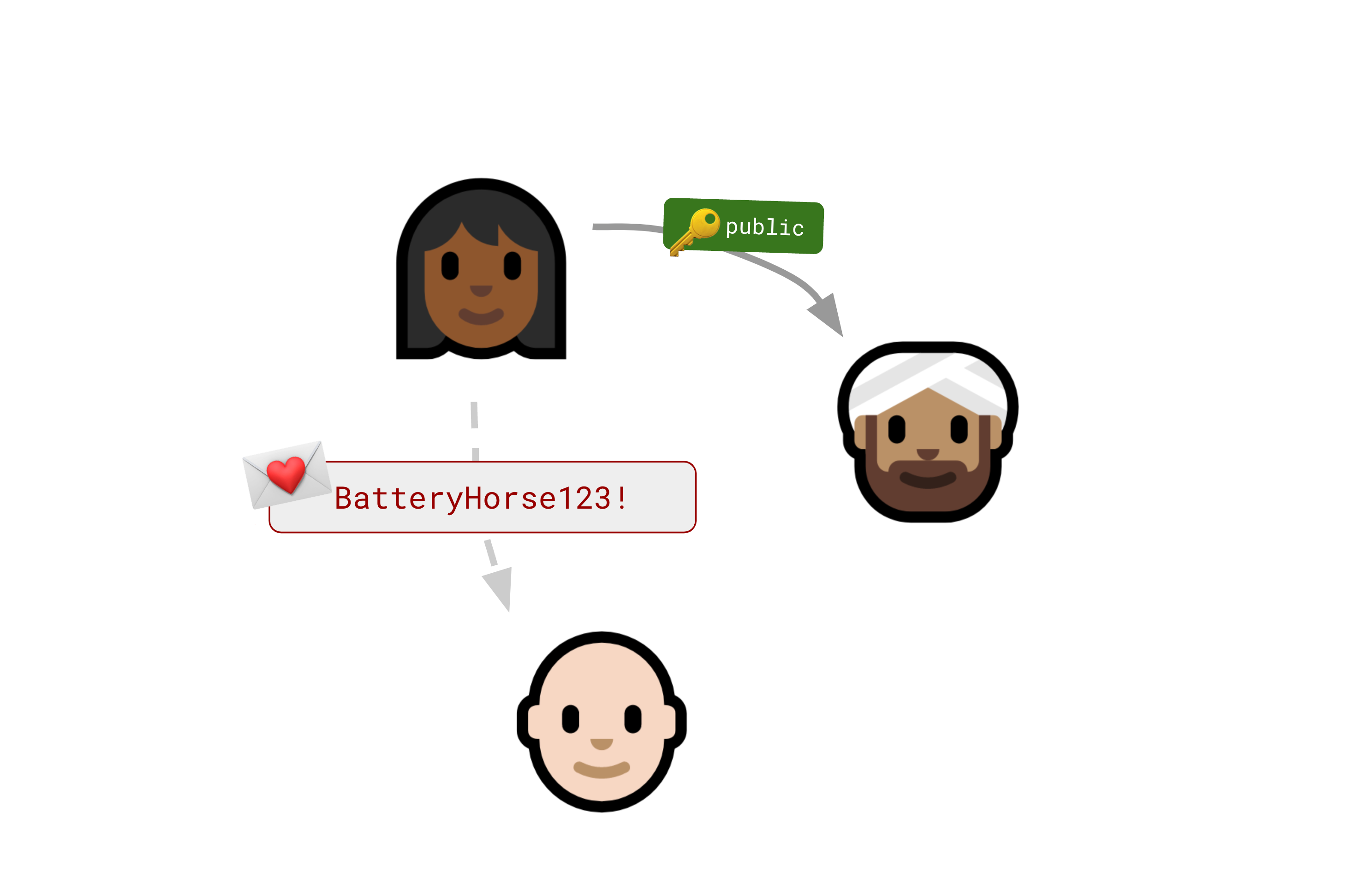

The most promising approach is to first share a single-use password out of band (in person, or via a channel you already trust). There is a whole family of cryptographic protocols that support this, called PAKEs (for “password-authenticated key exchange”).

We’re saying “password” but it’s generally presented as an invitation code.

In the most common forms, both parties need to know the secret code; this is called a balanced PAKE.

The downside is that the inviter and invitee need to connect directly to validate the invitation.

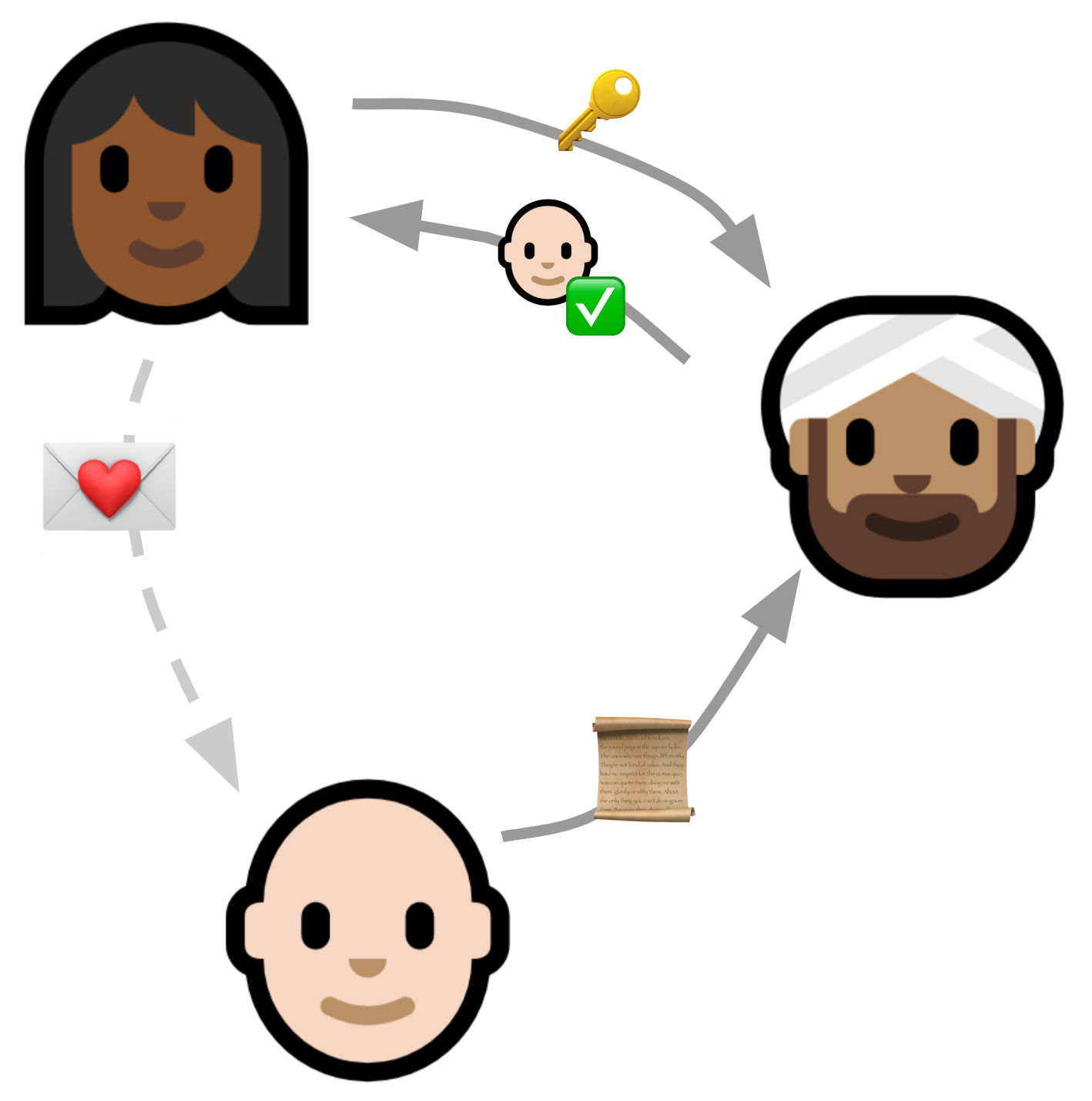

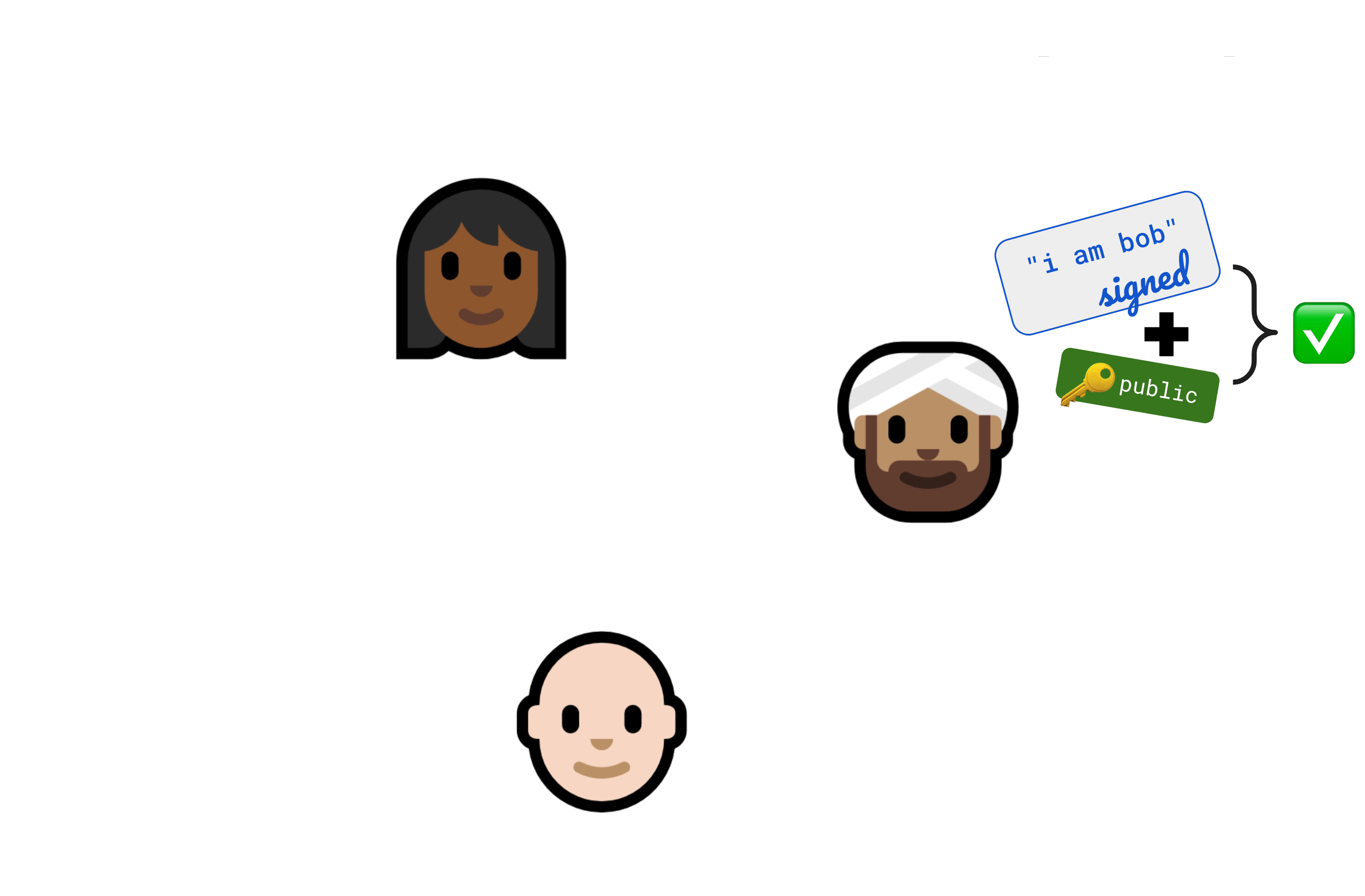

With an asymmetric PAKE, a third party can verify the password without knowing it.

With an asymmetric PAKE (asymmetric because the verifier doesn’t need to know the password) a third party can validate an invitation code without knowing it in advance or asking for it.

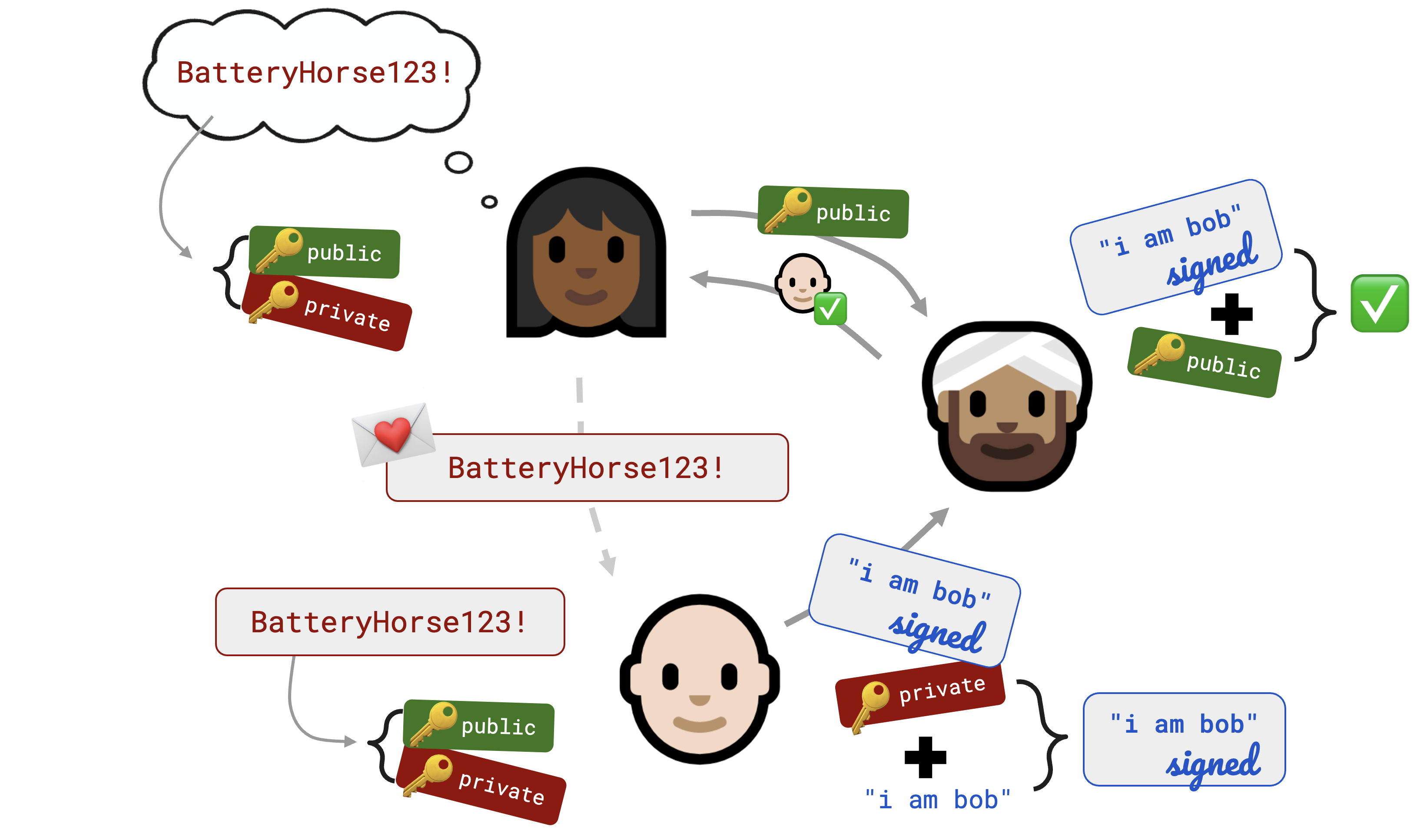

The Seitan token exchange

This brings us back to Keybase. In 2017 or so, they decided to try to monetize by building a Slack-but-encrypted thing for enterprise customers. They quickly realized that even if the global nerd population thought the idea of putting your public key on Twitter was really cool, Jim in Accounts Payable is probably just not going to do that. Sorry, it’s just not going to happen.

So they needed a way to bootstrap that initial key exchange, and they proposed something called a Seitan Token Exchange (presumably “seitan” because it’s not as soft as tofu).

This is the approach I stole for localfirst/auth. At the time I didn’t know a PAKE from a hole in the ground, but as it turns out this is essentially an asymmetric PAKE.

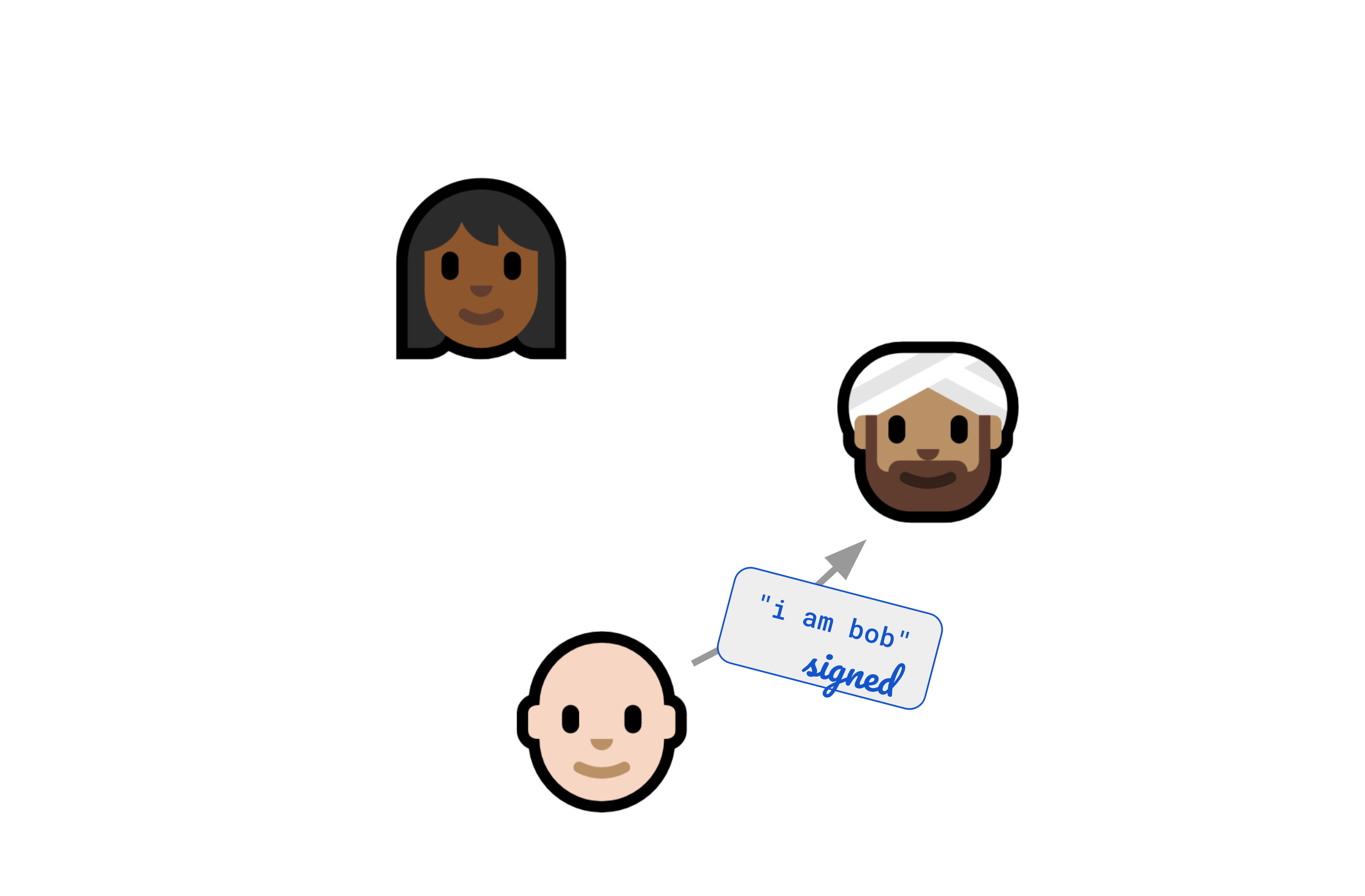

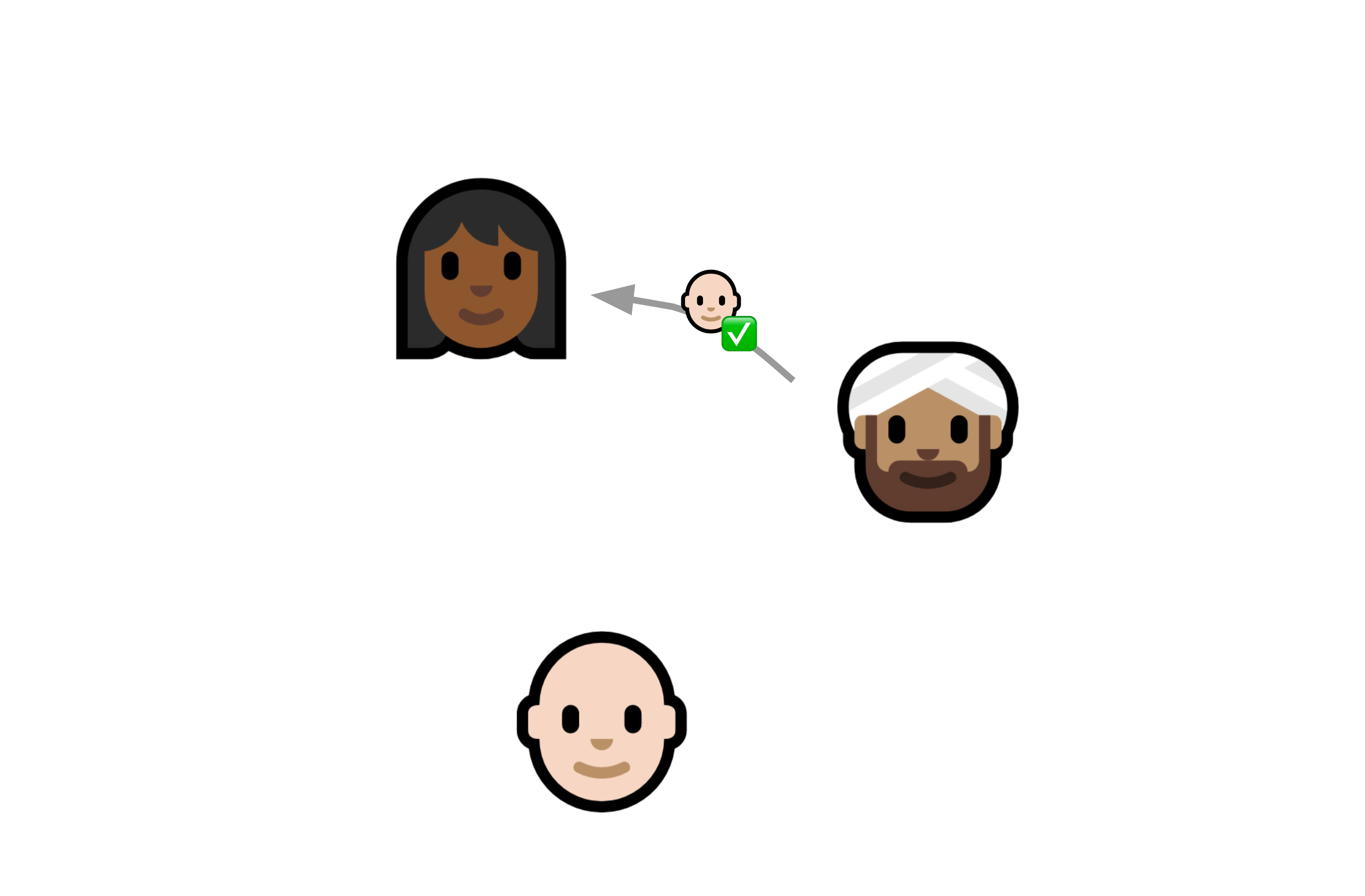

This is how it works:
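In rough outline, the inviter and the invitee each derive the same signing keypair from the invitation code; the inviter records only the public half with the group, and the invitee proves knowledge of the code by signing with the private half. The sketch below illustrates that general idea using Node's built-in crypto. It is not the @localfirst/auth API or Keybase's wire format: the function names, the scrypt salt, and the shape of the proof are all assumptions made for this example, and a real protocol also has to handle expiry, single use, and handing over the invitee's real device keys.

```typescript
import {
  createPrivateKey, createPublicKey, scryptSync, sign, verify, KeyObject,
} from "node:crypto";

// DER header that wraps a raw 32-byte Ed25519 seed as a PKCS#8 private key.
const PKCS8_ED25519_PREFIX = Buffer.from("302e020100300506032b657004220420", "hex");

// Anyone who knows the invitation code can derive the same keypair.
function keysFromCode(code: string): { privateKey: KeyObject; publicKey: KeyObject } {
  const seed = scryptSync(code, "invitation-sketch", 32); // key stretching; placeholder salt
  const privateKey = createPrivateKey({
    key: Buffer.concat([PKCS8_ED25519_PREFIX, seed]),
    format: "der",
    type: "pkcs8",
  });
  return { privateKey, publicKey: createPublicKey(privateKey) };
}

interface Invitation { publicKey: Buffer } // what the inviter records for the group
interface Proof { userName: string; signature: Buffer } // what the invitee presents

// Inviter: publish only the public half; the code itself travels out of band.
function createInvitation(code: string): Invitation {
  const { publicKey } = keysFromCode(code);
  return { publicKey: publicKey.export({ type: "spki", format: "der" }) };
}

// Invitee: prove knowledge of the code by signing with the derived private key.
function proveInvitation(code: string, userName: string): Proof {
  const { privateKey } = keysFromCode(code);
  return { userName, signature: sign(null, Buffer.from(userName), privateKey) };
}

// Any member: check the proof against the recorded public key, never seeing the code.
function validateProof(invitation: Invitation, proof: Proof): boolean {
  const publicKey = createPublicKey({ key: invitation.publicKey, format: "der", type: "spki" });
  return verify(null, Buffer.from(proof.userName), publicKey, proof.signature);
}

const invitation = createInvitation("aj7x-d2z-kp4");
const bobAccepted = validateProof(invitation, proveInvitation("aj7x-d2z-kp4", "bob"));
const eveAccepted = validateProof(invitation, proveInvitation("wrong-code", "eve"));
```

The point of the design is that `validateProof` needs nothing secret, so any member who has the group's records can admit the invitee, even if the inviter is offline.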

Identity is rooted in relationships

We’ve been talking about “identity”; but the beauty of this approach is that identity doesn’t need to mean “who you are in the world”, in the sense of linking to your real name or email or whatever — it can just mean “who you are to me”.

Rather than linking identity to a name or an email address, we link it to a relationship.

For example, in Ink & Switch’s Backchannel project one of the motivating use cases was connecting an anonymous source to a journalist. With a system like this the source doesn’t have to have a name at all; the only thing that matters is that they’re able to interact once.

Trust and identity are ultimately rooted in real-world relationships and interactions between human beings. I know you because we interact, whether that’s face-to-face or online. That’s what it means to “know” someone. And we can use that interaction to bootstrap cryptographically secure connections going forward. And if you have those secure connections with other people and I trust you, I can benefit from your connections, and so on, forming a network of trust relationships.

That helps us clear up our thinking about identity:

Q. What anchors identity?

A. Real-world human interactions and relationships.

This feels correct to me: It’s natural, human-scale, and rooted in social reality.

The server-centric model roots our identity in getting distant corporations to vouch for us.

- Are you really Herb?

- Yes — look, Google vouches for me.

With this model, we can instead vouch for ourselves:

- Are you really Herb?

- Yes — remember we talked earlier.

And we can vouch for each other:

- I have a friend named Herb and this is how you’ll know it’s him.

Without servers, what anchors authority?

Is it possible to have a secure, distributed access control list?

So now I have a way of knowing it’s you. But how do I know if you’re on my team? How do I know if I should share this document with you, or if you should be able to edit it?

On a server this information is often recorded in some kind of access control list (ACL). So maybe we need that, but distributed — everybody needs a copy of the same list. How would that work?

My first thought was that we could just put this information in an Automerge document and replicate it across everyone’s devices that way. But we can’t just let anyone edit this document at will. It should be obvious if anyone changes the permissions, and we need to know who changed the permissions, and if they … had permission to do so? Do we need an ACL for the ACL?

Let’s ignore that rabbit hole of infinite regress for the moment and focus on the mechanics. We need a way of replicating a document that is:

✅ tamper-evident: it shouldn’t be possible for someone to surreptitiously modify the list

✅ eventually consistent: everybody should have the same list

✅ authenticated: we need to know who has written changes to the list

So let’s look at some options for data structures.

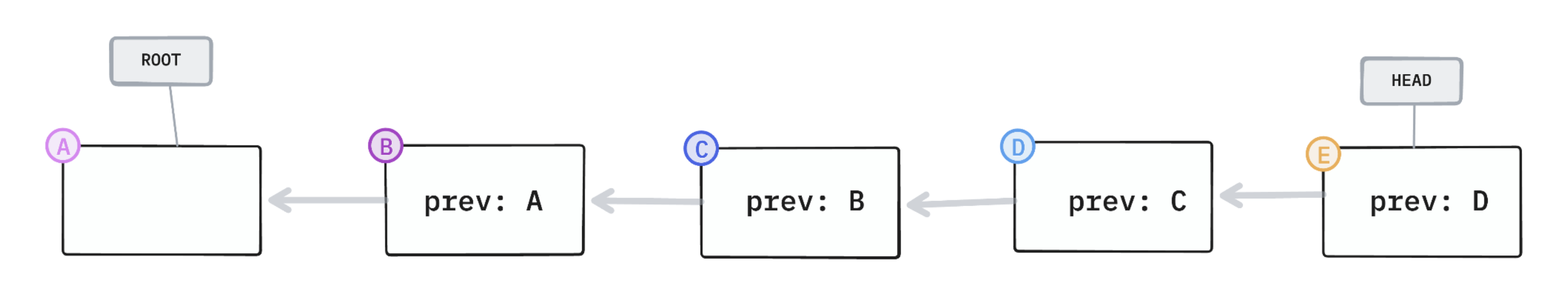

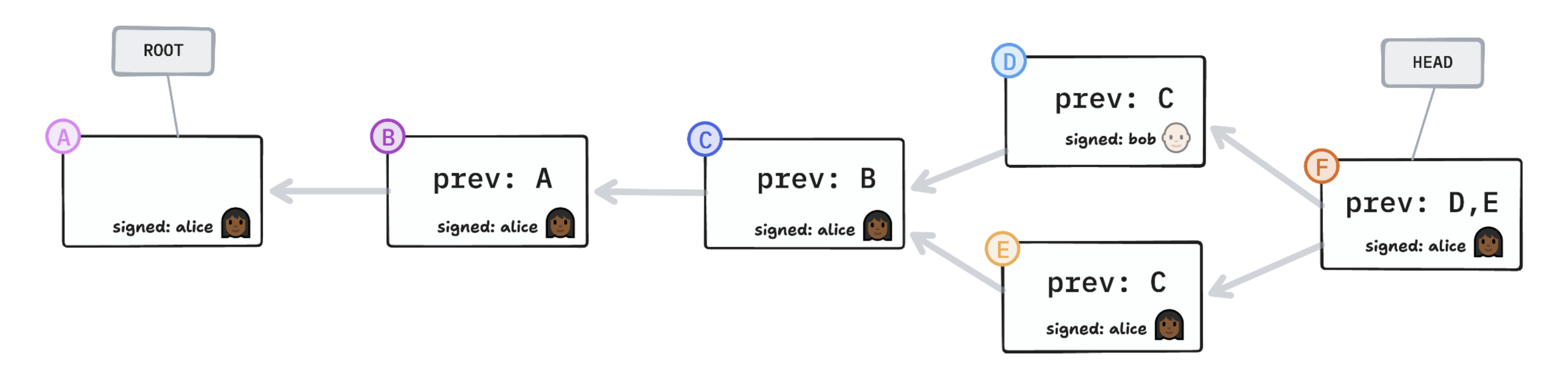

When we say “tamper-evident” the first thing you might think of is a hash chain (or blockchain), where each link contains a cryptographic hash of the previous link, so it’s impossible to modify one link or change the order without breaking the chain.

hash chain

✅ tamper-evident
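A hash chain is small enough to sketch in a few lines. This is an illustrative toy, assuming SHA-256 and string bodies; the `Link` shape and the function names are made up for the example.

```typescript
import { createHash } from "node:crypto";

interface Link { body: string; prev: string | null; hash: string }

// Each link's hash covers its body and the hash of the link before it.
const hashOf = (body: string, prev: string | null): string =>
  createHash("sha256").update(JSON.stringify([prev, body])).digest("hex");

function append(chain: Link[], body: string): Link[] {
  const prev = chain.length > 0 ? chain[chain.length - 1].hash : null;
  return [...chain, { body, prev, hash: hashOf(body, prev) }];
}

// A chain is intact if every link points at its predecessor and matches its own hash.
function isIntact(chain: Link[]): boolean {
  return chain.every((link, i) => {
    const expectedPrev = i === 0 ? null : chain[i - 1].hash;
    return link.prev === expectedPrev && link.hash === hashOf(link.body, link.prev);
  });
}

let chain: Link[] = [];
for (const body of ["create group", "add bob", "add charlie"]) chain = append(chain, body);

// Editing a link or swapping two links is detectable.
const tampered = chain.map((link, i) => (i === 1 ? { ...link, body: "add eve" } : link));
const reordered = [chain[0], chain[2], chain[1]];
const checks = [isIntact(chain), isIntact(tampered), isIntact(reordered)];
```

Someone who rewrites a link would have to recompute every later hash as well, which anyone who remembers the latest hash will notice.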

The problem is that this chain needs to be linear. Replicating it while ensuring consistency requires enforcing a strict ordering; and cryptocurrency blockchains are famously … not optimized for efficiency.

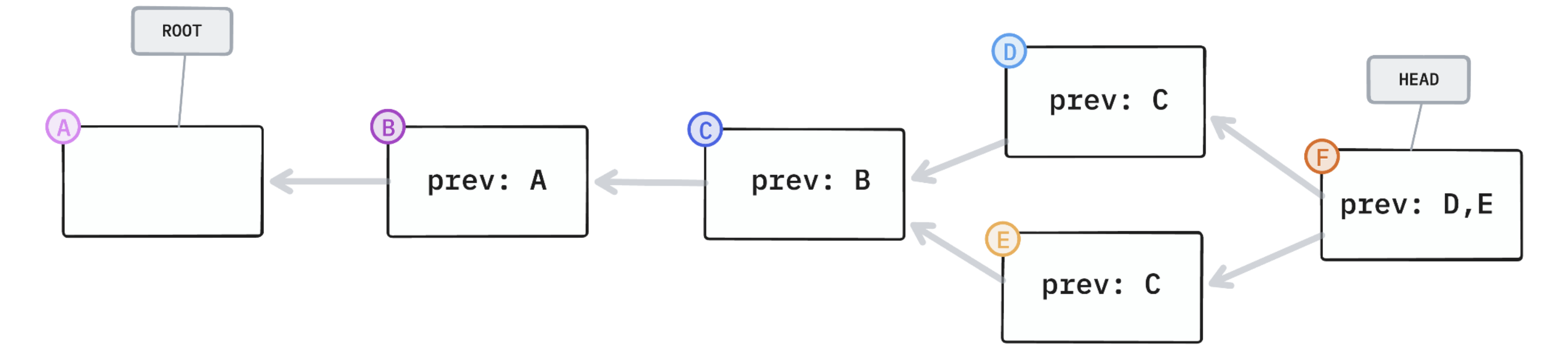

To accomplish this without burning enough fossil fuels to power multiple small countries, we could use a hash graph. A hash graph is a hash chain plus branching. A CRDT like Automerge combines a hash graph with automatic conflict resolution logic to support replication with eventual consistency. Git works the same way but with manual conflict resolution.

hash graph

✅ tamper-evident

✅ eventually consistent

So far so good. But we still need a way of verifying who made which changes to our distributed access control list.

A signature graph is a hash graph where the individual operations are signed by the author. This gives us all three things.

signature graph

✅ tamper-evident

✅ eventually consistent

✅ authenticated
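As a rough sketch of the idea, here is a link that names any number of parents (so branches and merges are possible) and carries an Ed25519 signature by its author. The field names and the choice of SHA-256 over a JSON payload are assumptions made for illustration, not the format used by any of the projects mentioned here.

```typescript
import { createHash, generateKeyPairSync, sign, verify, KeyObject } from "node:crypto";

interface SignedLink {
  body: string;
  prev: string[]; // hashes of parent links; more than one means a merge
  author: string;
  signature: Buffer;
  hash: string;
}

// The bytes that get signed: the body, the (sorted) parents, and the claimed author.
const payload = (body: string, prev: string[], author: string): Buffer =>
  Buffer.from(JSON.stringify({ body, prev: [...prev].sort(), author }));

const hashLink = (data: Buffer, signature: Buffer): string =>
  createHash("sha256").update(data).update(signature).digest("hex");

function createLink(body: string, prev: string[], author: string, key: KeyObject): SignedLink {
  const data = payload(body, prev, author);
  const signature = sign(null, data, key);
  return { body, prev, author, signature, hash: hashLink(data, signature) };
}

// A link is valid if its hash matches and its signature checks out against the author's key.
function verifyLink(link: SignedLink, keys: Map<string, KeyObject>): boolean {
  const publicKey = keys.get(link.author);
  if (publicKey === undefined) return false;
  const data = payload(link.body, link.prev, link.author);
  return hashLink(data, link.signature) === link.hash
    && verify(null, data, publicKey, link.signature);
}

const alice = generateKeyPairSync("ed25519");
const bob = generateKeyPairSync("ed25519");
const keys = new Map<string, KeyObject>([["alice", alice.publicKey], ["bob", bob.publicKey]]);

const root = createLink("create group", [], "alice", alice.privateKey);
const a = createLink("edit A", [root.hash], "alice", alice.privateKey); // concurrent with b
const b = createLink("edit B", [root.hash], "bob", bob.privateKey);
const merge = createLink("merge", [a.hash, b.hash], "alice", alice.privateKey);

const allValid = [root, a, b, merge].every((link) => verifyLink(link, keys));
const forged: SignedLink = { ...b, author: "alice" }; // Bob's link passed off as Alice's
const forgedValid = verifyLink(forged, keys);
```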

If you look at the work of anyone who’s trying to tackle this problem, you’re going to see some kind of signature graph: the Matrix protocol, DXOS’s HALO, Fission’s UCANs, etc. It’s a case of convergent evolution — we’ve all more or less independently landed on this data structure.

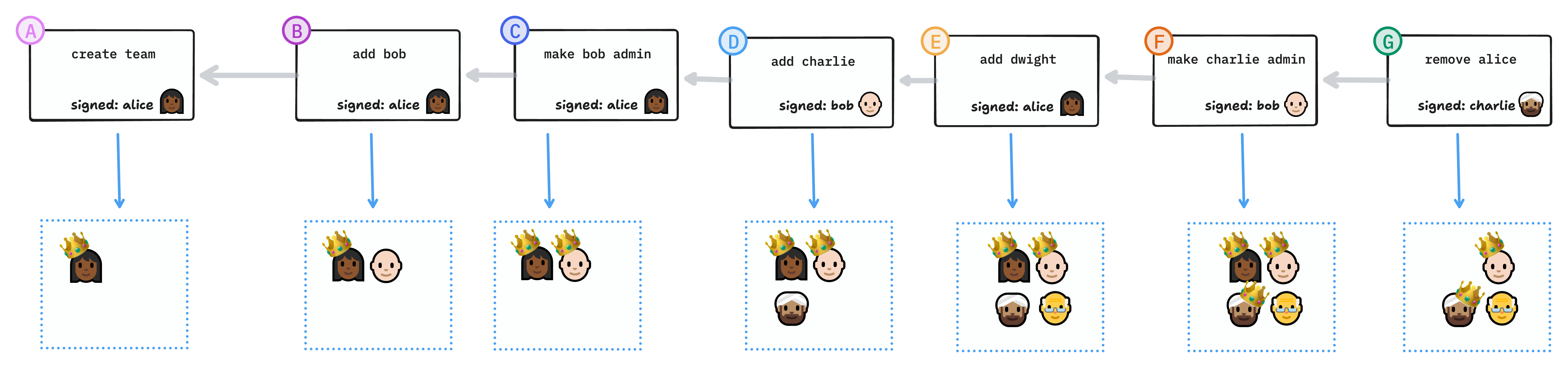

A group membership CRDT

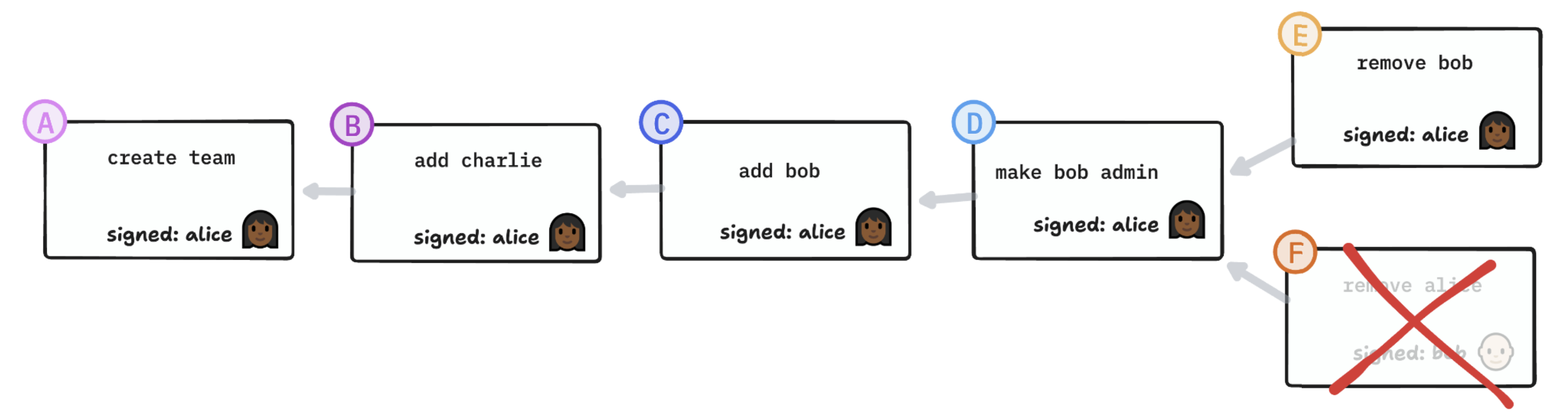

The way this works, concretely, is that there’s a series of operations on the group:

- The first link, the root of the chain, marks the creation of the group and by definition adds the founder to the group as an admin. It also includes the founder’s public keys.

- Each subsequent link represents an administrative action such as inviting a member, removing a member, promoting a member to admin (or another role), or demoting a member.

- Each link contains a cryptographic hash of the preceding link, so the order of the links cannot be altered.

- Each link contains a digital signature of its content by the member making the change, so that its authenticity can be independently verified, and so that the link body cannot be tampered with.
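Leaving the hashes and signatures aside, the membership logic can be pictured as a reducer that replays the operations in order and ignores any that the author wasn't entitled to make. This is a simplified sketch with invented type and action names, not the library's actual data model.

```typescript
type Role = "admin" | "member";

type Action =
  | { type: "CREATE"; founder: string }
  | { type: "ADD"; name: string; role: Role }
  | { type: "REMOVE"; name: string }
  | { type: "SET_ROLE"; name: string; role: Role };

interface Op { author: string; action: Action }

// Replays a linear history of operations into the current membership.
function reduceMembers(ops: Op[]): Map<string, Role> {
  const members = new Map<string, Role>();
  ops.forEach((op, index) => {
    const { author, action } = op;
    if (action.type === "CREATE") {
      // Only the root link can create the group; the founder starts as admin.
      if (index === 0) members.set(action.founder, "admin");
      return;
    }
    // Every later action must come from someone who is an admin at that point.
    if (members.get(author) !== "admin") return;
    switch (action.type) {
      case "ADD":
        members.set(action.name, action.role);
        break;
      case "REMOVE":
        members.delete(action.name);
        break;
      case "SET_ROLE":
        if (members.has(action.name)) members.set(action.name, action.role);
        break;
    }
  });
  return members;
}

const ops: Op[] = [
  { author: "alice", action: { type: "CREATE", founder: "alice" } },
  { author: "alice", action: { type: "ADD", name: "bob", role: "member" } },
  { author: "bob", action: { type: "ADD", name: "eve", role: "member" } }, // ignored: not an admin
  { author: "alice", action: { type: "SET_ROLE", name: "bob", role: "admin" } },
  { author: "bob", action: { type: "ADD", name: "charlie", role: "member" } },
  { author: "bob", action: { type: "REMOVE", name: "alice" } }, // an admin can remove the founder
];

const summary = Array.from(reduceMembers(ops).entries())
  .map(([name, role]) => `${name}:${role}`)
  .join(",");
```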

When I found a group, I am the only member and the only authority. I can decide to add members, and I can decide to delegate authority to them as well. Another member with admin authority could even remove me from the group.

This is a key point that we’ll come back to: In a group, authority is rooted in the act of creating the group.

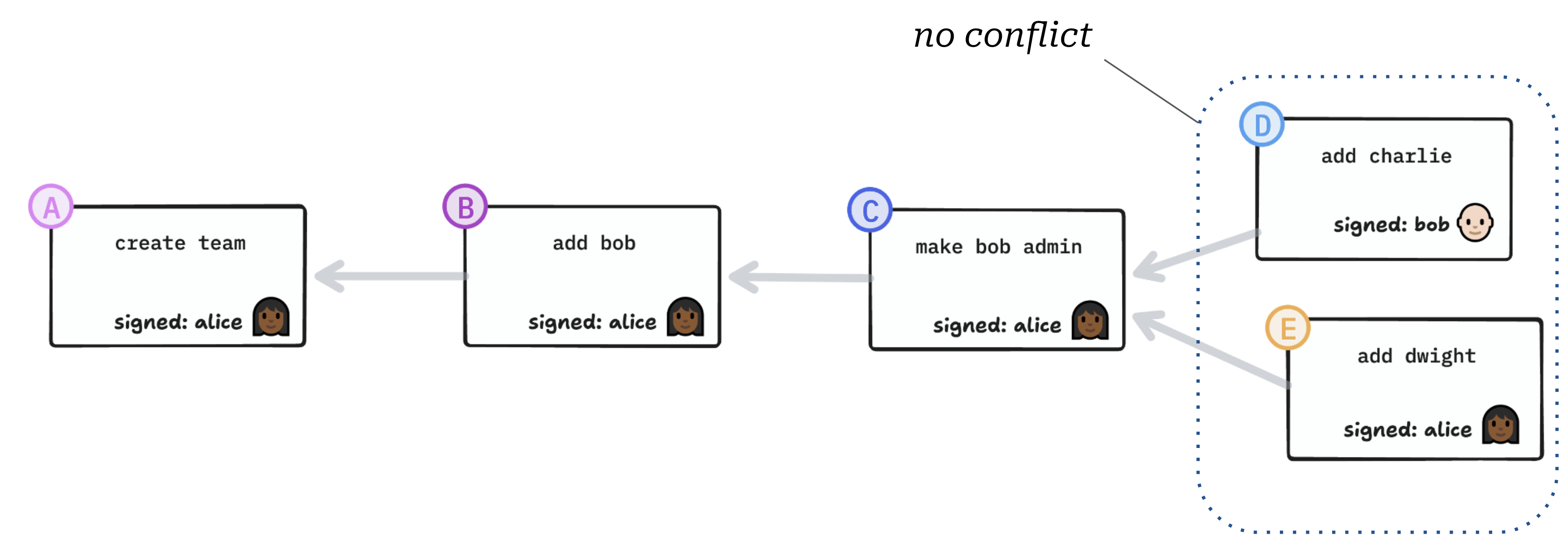

Detecting and resolving conflicts

To be replicable, this data structure needs to be a CRDT. However, you can’t model group permissions using a JSON CRDT like Automerge. I’ll explain why.

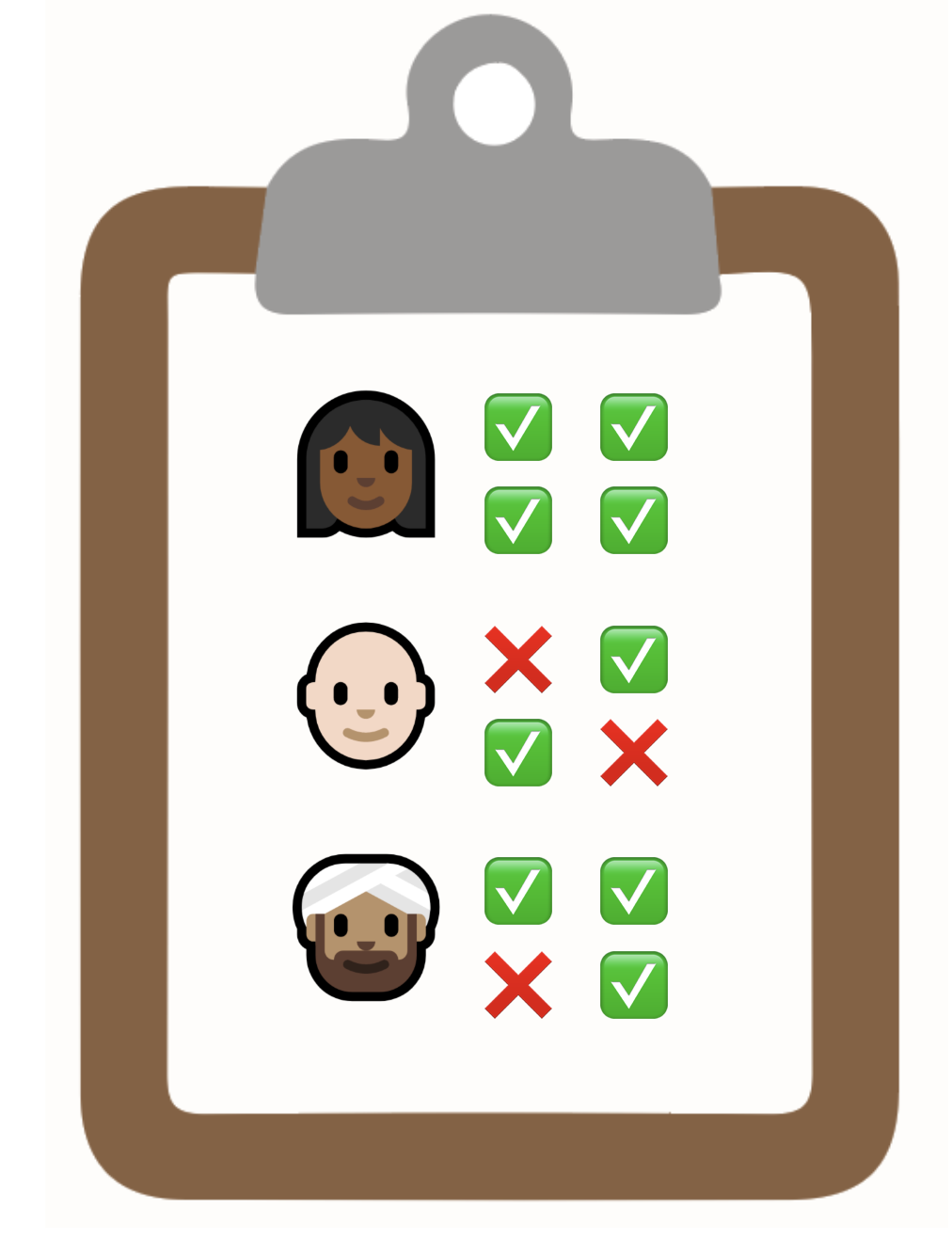

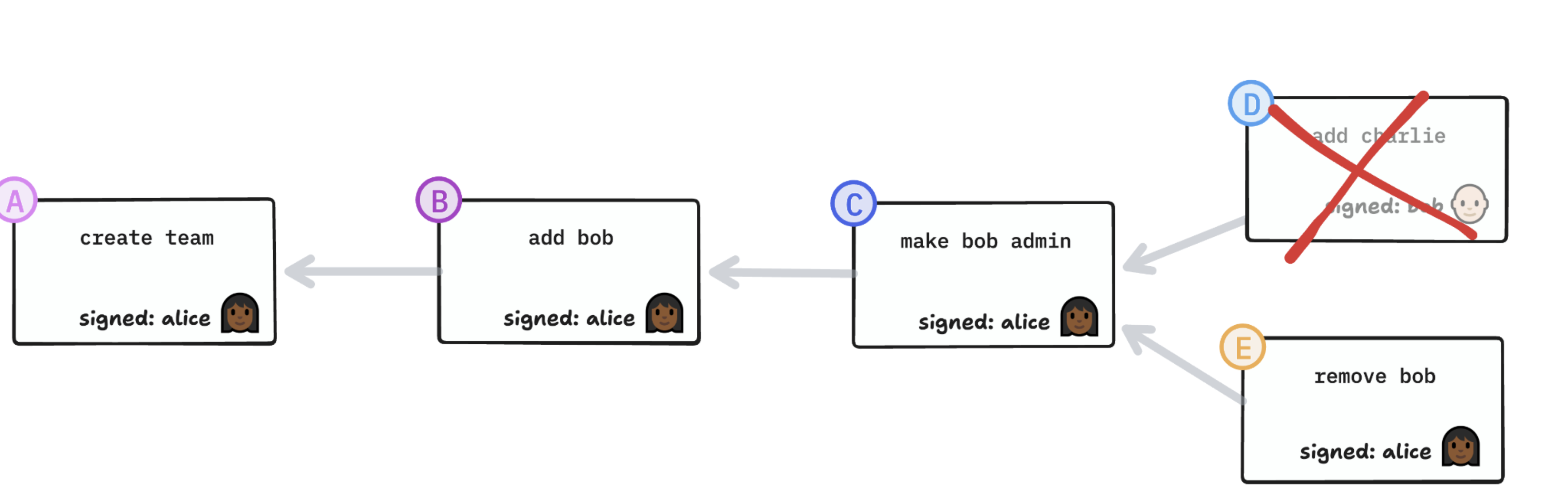

Here we have two concurrent actions, but there’s no conflict: we can resolve this by applying them in an arbitrary but deterministic order. So far, nothing a generic CRDT couldn’t handle.

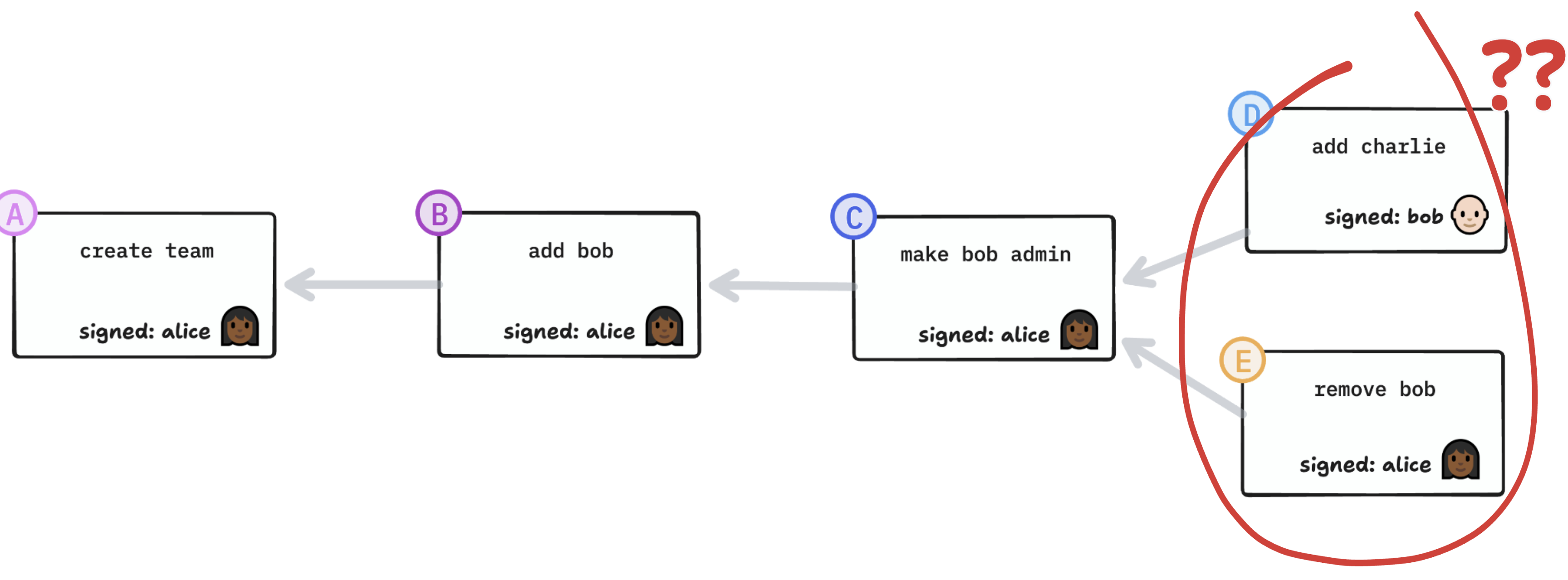

What if someone takes actions while concurrently being removed? For example, what if Bob adds Charlie to the group and concurrently, Alice removes Bob from the group? Now we have a conflict.

Q. Is Charlie in the group or not?

It’s important to resolve this correctly, or else Bob could circumvent his removal by writing a concurrent operation adding proxy-Bob to the group.

A. No. Removals always take precedence, so Bob’s concurrent addition of Charlie is discarded. A generic CRDT like Automerge won’t be able to resolve this conflict in a principled way; it won’t even see it as a conflict. So this has to be implemented as a custom CRDT engine.
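One way to picture such a rule: when merging two concurrent branches, drop anything authored by a member whom the other branch removed, then apply what's left in a deterministic order. The sketch below assumes just two branches and invented `Op` shapes; it is not the library's resolver, and it leaves out cycles of removals, which need an extra rule.

```typescript
interface Op { type: "ADD" | "REMOVE"; author: string; name: string }

const removedIn = (branch: Op[]): string[] =>
  branch.filter((op) => op.type === "REMOVE").map((op) => op.name);

// Merges two concurrent branches into one sequence, letting removals win.
function resolve(branchA: Op[], branchB: Op[]): Op[] {
  // Order the branches by content so every replica gets the same result.
  const [first, second] = [branchA, branchB].sort((x, y) => {
    const a = JSON.stringify(x);
    const b = JSON.stringify(y);
    return a < b ? -1 : a > b ? 1 : 0;
  });
  // Discard operations whose author was removed on the other branch.
  const keep = (branch: Op[], other: Op[]): Op[] =>
    branch.filter((op) => removedIn(other).indexOf(op.author) === -1);
  return [...keep(first, second), ...keep(second, first)];
}

function applyOps(members: string[], ops: Op[]): string[] {
  return ops.reduce<string[]>((current, op) => {
    const without = current.filter((m) => m !== op.name);
    return op.type === "ADD" ? [...without, op.name] : without;
  }, members);
}

// Conflict: Alice removes Bob while Bob concurrently adds Charlie.
const aliceBranch: Op[] = [{ type: "REMOVE", author: "alice", name: "bob" }];
const bobBranch: Op[] = [{ type: "ADD", author: "bob", name: "charlie" }];
const afterConflict = applyOps(["alice", "bob"], resolve(aliceBranch, bobBranch));

// No conflict: two concurrent additions both survive.
const addCharlie: Op[] = [{ type: "ADD", author: "alice", name: "charlie" }];
const addDana: Op[] = [{ type: "ADD", author: "bob", name: "dana" }];
const afterAdds = applyOps(["alice", "bob"], resolve(addCharlie, addDana));
```

In the conflict case only Alice is left in the group: Charlie never gets in, because the member who added him was concurrently removed.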

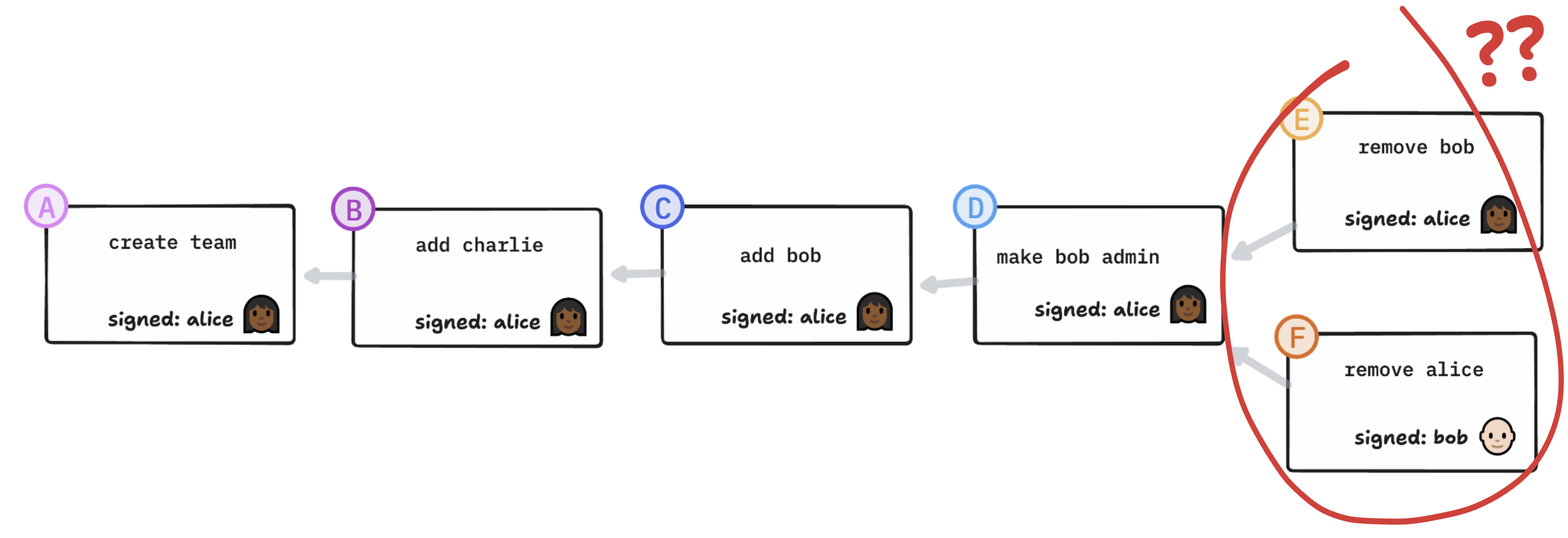

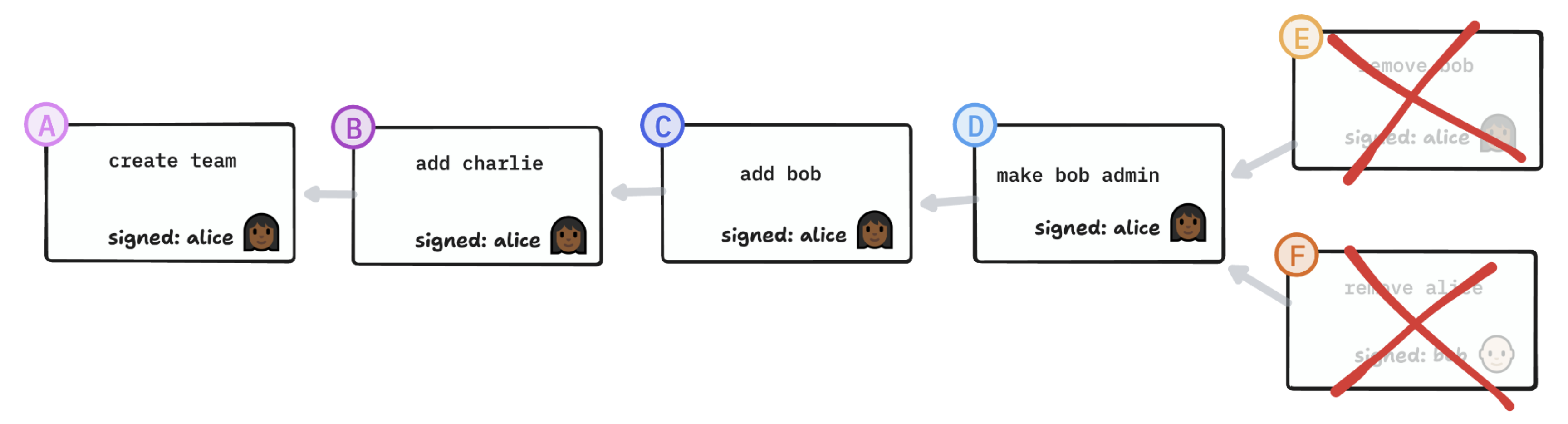

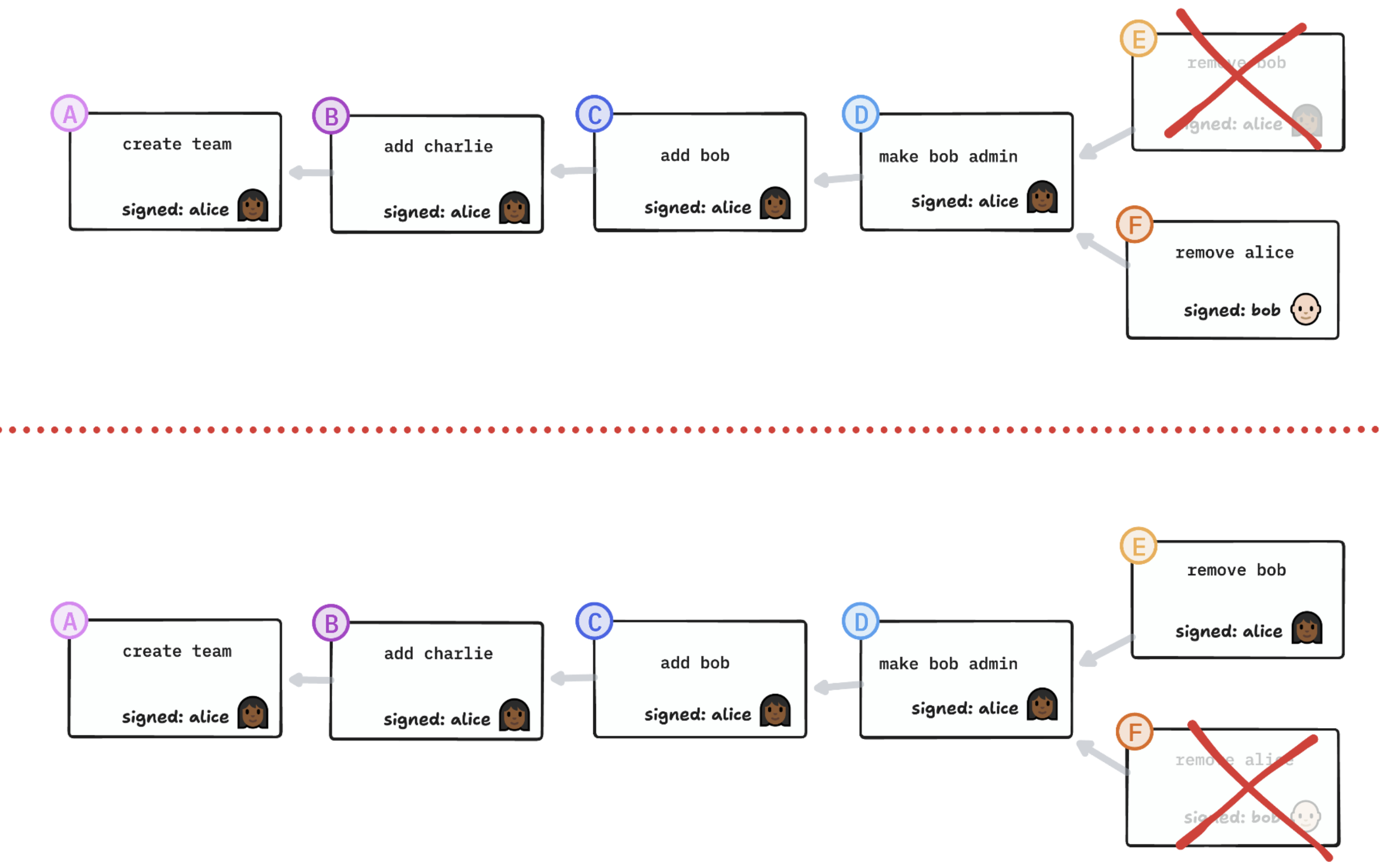

Mutual removals

What if two admins remove each other concurrently? (Or more generally, what if there’s a cycle of concurrent removals?)

As a scenario involving two legitimate members of the group, this is far-fetched and unlikely to happen. A more realistic and worrying scenario might be, for example, a compromised and revoked device trying to undo its revocation.

Option 1: Remove both

The logical conclusion of “removals take precedence” is to remove both.

However, this seems unlikely to be the correct way of resolving the issue. It leaves Charlie orphaned in a group without admins and creates an opening for a denial-of-service attack, where a rogue device blows up the group rather than allowing itself to be removed.

Option 2: Fork the group

Another approach is to “fork the world” into two parallel versions of the same group: one where Alice stays and one where Bob stays. Then each member decides which world they want to live in. This reflects the reality of a decentralized system.

I suspect that in practice it’d be hard to communicate what’s going on to most users, and it would just be confusing and upsetting.

I call option 2 the “Papal Schism” approach, in honor of the time when there were two popes and all of Catholic Christendom had to decide which one to follow.

Option 3: Keep the senior member

The solution I settled on is to let seniority be a proxy for who is more likely to be in the right. In this case, Alice has been in the group longer than Bob, so she stays.
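The seniority rule can be sketched as a filter over concurrent removals: when two members remove each other, only the removal made by the longer-standing member survives. The `Removal` shape and the `seniority` list (names in the order they joined) are assumptions for this example, and it only handles two-member cycles, not longer ones.

```typescript
interface Removal { author: string; target: string }

// seniority lists member names in the order they joined, earliest first.
function resolveMutualRemovals(removals: Removal[], seniority: string[]): Removal[] {
  const rank = (name: string): number => {
    const i = seniority.indexOf(name);
    return i === -1 ? Infinity : i; // unknown members count as most junior
  };
  return removals.filter((removal) => {
    const isMutual = removals.some(
      (other) => other.author === removal.target && other.target === removal.author,
    );
    // Keep ordinary removals; in a mutual pair, keep only the senior member's.
    return !isMutual || rank(removal.author) < rank(removal.target);
  });
}

const surviving = resolveMutualRemovals(
  [
    { author: "alice", target: "bob" },
    { author: "bob", target: "alice" },
  ],
  ["alice", "bob", "charlie"],
);
```

Here the only surviving removal is Alice's, so Bob is out and Alice stays.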

Authority is rooted in authorship

The key insight for me was that the root node of a signature graph gives you an anchor for authority. If I create a group, by definition I get to decide who’s in it.

At the moment of creation, Alice is the absolute dictator of a group with no other members: Alice decides who to add to the group. Alice decides if she wants to delegate her authority to other members. She can make everyone else an admin too.

This delegates the right to remove anyone, including herself, from the group. But even once she’s gone, the authority behind any action — any addition or removal of members, any further delegation of authority — can always be traced back to the authority inherent in her act of creation.

Some conclusions

We started with two big questions about how we find our footing in a local-first world.

Q: What anchors identity?

A: Preexisting human relationships and interactions.

The Seitan protocol that I decided to use, like any other flavor of PAKE, is complex and involves many cryptographic subtleties. But any invitation protocol, no matter how involved, has something very simple at its core: An interaction that takes place in the context of some existing relationship.

In the beginning there was a relationship.

At first, it felt like cheating, or a cop-out, to rely on a “pre-existing trusted side channel” to transmit this invitation. But it’s not at all. And it’s not a minor thing or an implementation detail either — in fact, it’s the core of this whole thing.

How does Alice know it’s Bob? Because Alice and Bob had that interaction where Alice communicated the invitation code to him, and Bob is able to prove that he’s the one she had that interaction with.

The fact that this pre-existing channel exists at all is very significant; it only exists because there is some kind of pre-existing relationship between Alice and Bob in the real world.

So, that’s our answer: preexisting human interactions and relationships are what anchor identity.

Q: What anchors authority?

A: The act of creation.

When you found a company, you decide who you want to invite to work with you. Depending on how you set things up, you might end up being removed from the company later on. But the authority to do that can ultimately be traced back to delegations of authority you made.

When you start any kind of project, you decide who you want to go on that journey with you, or if you want to go it alone.

When you begin writing a document, you don’t have to share it with anyone; you could keep it to yourself forever. If you do share it, the details are up to you: Do you share it with the whole world? Just with your team? Just with one person? Whose input will you pay attention to?

None of this gives you any power over anyone outside the context of the thing you created: They can choose whether to accept, and they can stop collaborating with you at any time.

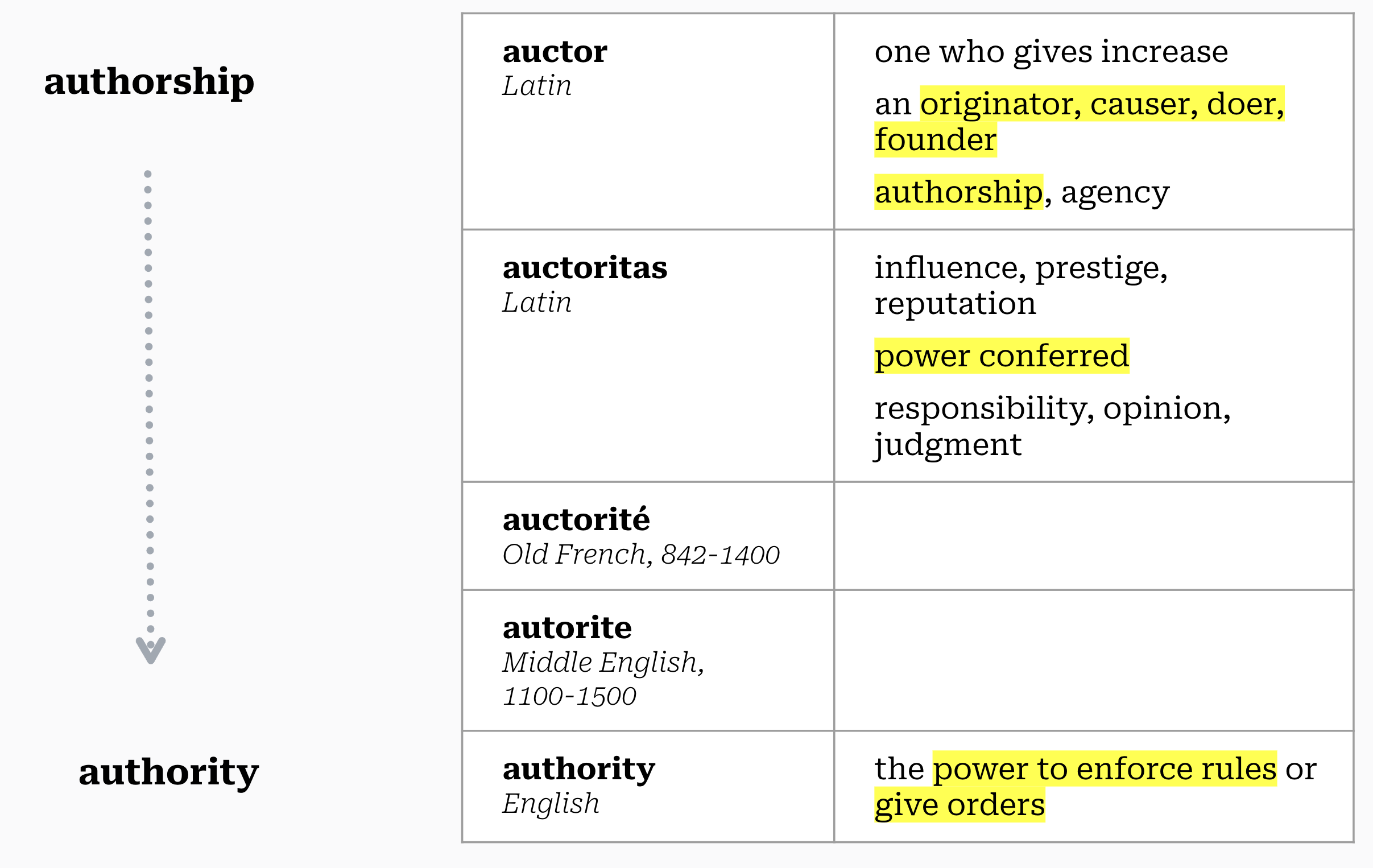

It only recently occurred to me that authority and authorship come from the same root word.

Authority is rooted in authorship, in the act of creation.

So. These are the answers I’ve found to our big questions. I think they’re good answers. As it turns out, not only can you anchor decentralized security on something solid, but the foundation we’ve found is something more legitimate, more satisfying, more human than a distant company’s “because I said so”.

Putting it into practice

If you’re looking to secure a local-first application, take a look at my @localfirst/auth library, which is a working implementation of these ideas, including an auth provider for integration with automerge-repo.

At some point in the process of creating localfirst/auth, I realized that I’d made a custom CRDT; so I extracted the machinery for creating, validating, and interpreting signature chains into the CRDX package. This can be used on its own to create an encrypted, authenticated CRDT with bespoke conflict detection and resolution logic.

If you take any of this out for a spin, or if you have questions about how it works, please get in touch with me via one of the (pre-existing, trusted) channels listed below!

Thanks

This library’s shape emerged from a series of conversations early on with Peter van Hardenberg of Ink & Switch. Peter drilled into my head the idea that device keys should be generated locally and never leave the device, and pointed me towards Keybase’s signature chains.

Martin Kleppmann of Cambridge University has been very generous with his time and expertise. Martin is the creator of Automerge and co-author with Peter of Ink & Switch’s local-first manifesto. Much of localfirst/auth is an expression in code of his theoretical work. Every time I’ve been stuck on something, Martin has co-written a paper that solves that exact thing. These two papers were particularly helpful: